The server virtualization market is expected to grow to approximately 8 billion dollars by 2023, which would mean a compound annual growth rate (CAGR) of 7 percent between 2017 and 2023. Protecting these virtual environments is therefore a critical component of an organization’s data protection strategy.
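To put the growth figures in perspective, here is a rough back-of-the-envelope sketch of what a 7 percent CAGR implies. It assumes six annual compounding periods between 2017 and 2023 and treats the 8 billion dollar figure as the 2023 end value; the function names are illustrative only, and the derived 2017 number is an implication of those two inputs, not a figure quoted from any market report.

```python
def implied_start_value(end_value: float, rate: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between a start value and an end value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


END_2023 = 8.0        # billion dollars, from the forecast above
RATE = 0.07           # 7 percent CAGR, from the forecast above
YEARS = 2023 - 2017   # assumption: six annual compounding periods

start_2017 = implied_start_value(END_2023, RATE, YEARS)
print(f"Implied 2017 market size: about {start_2017:.2f} billion dollars")
print(f"Round-trip CAGR check: {cagr(start_2017, END_2023, YEARS):.2%}")
```

Under these assumptions the market would have been a little over 5 billion dollars in 2017, which is to say roughly 50 percent cumulative growth over the period.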

This growth in virtual environments necessitates that data protection be managed centrally, with better policies and reporting systems. However, in an environment consisting of heterogeneous applications, databases and operating systems, overall data protection can become a complex task.

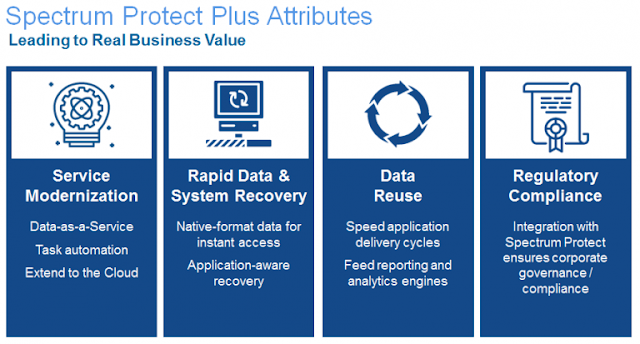

In my day-to-day meetings with clients from the automobile, retail, financial and other industries, the most common data protection requirements are simplified management, faster recovery, data re-use and the ability to efficiently protect data for varied purposes such as testing and development, DevOps, reporting and analytics.

Data protection challenges for a major auto company

I recently worked with a major automobile company in India that has a large estate of virtual machines. Management was looking for disk-based, agentless data protection software that could protect both its virtual and physical environments, was easy to deploy, could be operated from a single console, offered faster recovery times and provided reporting and analytics.

The company has three data centers and was facing challenges with data protection management and disaster recovery. Backup agents had to be installed individually, which required expertise in the operating system, database and applications involved. Each site needed dedicated infrastructure to manage the virtual environment, plus several other tools to perform granular recovery when required. The company also had virtual tape libraries that replicated data to other sites.

The benefits of data protection and availability for virtual environments

For the automobile company in India, IBM Systems Lab Services proposed replacing its siloed and complex backup architecture with a simpler IBM Spectrum Protect Plus solution. The proposed strategy was to store short-term data on vSnap storage, which comes with IBM Spectrum Protect Plus, and to store long-term data on IBM Cloud Object Storage to reduce backup storage costs. The multisite, geo-dispersed configuration of IBM Cloud Object Storage could also help the auto company reduce its dependence on the replication it previously relied on with virtual tape libraries.
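The short-term and long-term split described above can be pictured with a small sketch. This is purely illustrative: it does not use any IBM Spectrum Protect Plus API, and the `Backup` class, the `storage_tier` function, the tier labels and the 30-day cutoff are all hypothetical names and values chosen for the example, not settings from the client's environment.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical cutoff: backups up to this age stay on short-term storage.
SHORT_TERM_DAYS = 30


@dataclass(frozen=True)
class Backup:
    name: str
    taken_on: date


def storage_tier(backup: Backup, today: date,
                 short_term_days: int = SHORT_TERM_DAYS) -> str:
    """Return the (hypothetical) tier label for a backup based on its age."""
    age_days = (today - backup.taken_on).days
    return "vsnap" if age_days <= short_term_days else "object-storage"


today = date(2020, 3, 1)
recent = Backup("vm-app01-daily", date(2020, 2, 20))
older = Backup("vm-app01-monthly", date(2019, 11, 1))

for b in (recent, older):
    print(f"{b.name}: {storage_tier(b, today)}")
```

In practice the retention period for each tier would be driven by the organization's recovery objectives and compliance requirements rather than a single fixed number.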

We also proposed integrating IBM Spectrum Protect Plus with IBM Spectrum Protect and offloading data to IBM Cloud Object Storage, so that the client could retire more expensive backup hardware, such as virtual tape libraries, from its data centers. This was all part of a roadmap to transform its backup environment.

IBM Spectrum Protect Plus is easy to install, which eliminates the need for advanced technical expertise when deploying backup software. Its backup policy creation and scheduling features helped the auto company reduce its dependence on backup administrators. Its role-based access control (RBAC) feature allowed the company to divide backup and restore work more cleanly among VM admins, backup admins, database administrators and other roles. The ability to manage data protection from a single place also let the auto company manage all three of its data centers from one location.

The company can now use Spectrum Protect Plus to recover data quickly in disaster scenarios and to shorten its development cycle by creating clones from backup data in minutes or even seconds. The ability to search for and recover a single file made the auto company’s need for granular file recovery easy to address.

One of the company’s major challenges was to improve the performance and management of database and application protection. Spectrum Protect Plus addressed this with agentless backup of databases and applications running in physical and virtual environments. Spectrum Protect Plus also offers better reporting and analytics functionality, which allowed the client to send intuitive reports to the company’s top management.