Many of us in supply chain remember the major data breaches that Target and Home Depot suffered within months of each other, both resulting from third-party relationships. Six years later, supply chain security breaches still make headlines, with the average cost of a data breach now at $3.86 million and mega breaches (50 million records or more stolen) reaching $392 million.

So, what’s it going to take to tackle supply chain security?

Part of the challenge is that there is no single, functional definition of supply chain security. It’s a massively broad area that includes everything from physical threats to cyber threats, from protecting transactions to protecting systems, and from mitigating risk with parties in the immediate business network to mitigating risk derived from third, fourth and “n” party relationships. However, there is growing agreement that supply chain security requires a multifaceted and functionally coordinated approach.

Supply chain leaders tell us they are concerned about cyber threats, so in this blog, we are going to focus on the cybersecurity aspects of protecting the quality and delivery of products and services, and the associated data, processes and systems involved.

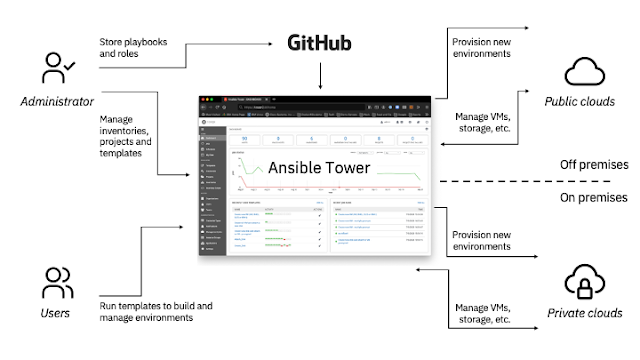

“Supply chain security is a multi-disciplinary problem, and requires close collaboration and execution between the business, customer support and IT organizations, which has its own challenges. The companies that get this right start with IT and a secure multi-enterprise business network, then build upward with carefully governed and secured access to analytics and visibility capabilities and, from there, continuously monitor every layer for anomalous behavior.” Marshall Lamb, CTO, IBM Sterling

Why is supply chain security important?

Supply chains are all about getting customers what they need at the right price, place and time. Any disruption or risk to the integrity of the products or services being delivered, the privacy of the data being exchanged, or the completeness of associated transactions can have damaging operational, financial and brand consequences. Data breaches, ransomware attacks and malicious activities from insiders or attackers can occur at any tier of the supply chain. Even a security incident localized to a single vendor or third-party supplier can still significantly disrupt the “plan, make and deliver” process.

Mitigating this risk is a moving target and mounting challenge. Supply chains are increasingly complex global networks comprised of large and growing volumes of third-party partners who need access to data and assurances they can control who sees that data. Today, new stress and constraints on staff and budget, and rapid unforeseen changes to strategy, partners and the supply and demand mix, add further challenges and urgency. At the same time, more knowledgeable and socially conscious customers and employees are demanding transparency and visibility into the products and services they buy or support. Every touchpoint adds an element of risk that needs to be assessed, managed and mitigated.

Top 5 supply chain security concerns

Supply chain leaders around the globe and across industries tell us these five supply chain security concerns keep them awake at night:

1. Data protection. Data is at the heart of business transactions and must be secured and controlled at rest and in motion to prevent breach and tampering. Secure data exchange also involves trusting the other source, be it a third party or an e-commerce website. Having assurances that the party you are interacting with is who they say they are is vital.

2. Data locality. Critical data exists at all tiers of the supply chain, and must be located, classified and protected no matter where it is. In highly regulated industries such as financial services and healthcare, data must be acquired, stored, managed, used and exchanged in compliance with industry standards and government mandates that vary based on the regions in which they operate.

3. Data visibility and governance. Multi-enterprise business networks not only facilitate the exchange of data between businesses, but also allow multiple enterprises access to data so they can view, share and collaborate. Participating enterprises demand control over the data and the ability to decide who to share it with and what each permissioned party can see.

4. Fraud prevention. In a single order-to-cash cycle, data changes hands numerous times, sometimes in paper format and sometimes electronic. Every point at which data is exchanged between parties or shifted within systems presents an opportunity for it to be tampered with – maliciously or inadvertently.

5. Third-party risk. Everyday products and services – from cell phones to automobiles – are increasing in sophistication. As a result, supply chains often rely on four or more tiers of suppliers to deliver finished goods. Each of these external parties can expose organizations to new risks based on their ability to properly manage their own vulnerabilities.

Supply chain security best practices

Supply chain security requires a multifaceted approach. There is no one panacea, but organizations can protect their supply chains with a combination of layered defenses. As teams focused on supply chain security make it more difficult for threat actors to run the gauntlet of security controls, they gain more time to detect nefarious activity and take action. Here are just a few of the most important strategies organizations are pursuing to manage and mitigate supply chain security risk.

◉ Security strategy assessments. To assess risk and compliance, you need to evaluate existing security governance – including data privacy, third-party risk and IT regulatory compliance needs and gaps – against business challenges, requirements and objectives. Security risk quantification, security program development, regulatory and standards compliance, and security education and training are key.

◉ Vulnerability mitigation and penetration testing. Identify basic security concerns first by running vulnerability scans. Fixing bad database configurations and poor password policies, eliminating default passwords, and securing endpoints and networks can immediately reduce risk with minimal impact on productivity or downtime. Employ penetration test specialists to attempt to find vulnerabilities in all aspects of new and old applications, the IT infrastructure underlying the supply chain and even people, through phishing simulation and red teaming.

◉ Digitization and modernization. It’s hard to secure data if you’re relying on paper, phone, fax and email for business transactions. Digitization of essential manual processes is key. Technology solutions that make it easy to switch from manual, paper-based processes and bring security, reliability and governance to transactions provide the foundation for secure data movement within the enterprise and with clients and trading partners. As you modernize business processes and software, you can take advantage of encryption, tokenization, data loss prevention, and file access monitoring and alerting, and bring teams and partners along with security awareness and training.

◉ Data identification and encryption. Data protection programs and policies should include the use of discovery and classification tools to pinpoint databases and files that contain protected customer information, financial data and proprietary records. Once data is located, using the latest standards and encryption policies protects data of all types, at rest and in motion – customer, financial, order, inventory, Internet of Things (IoT), health and more. Incoming connections are validated, and file content is scrutinized in real time. Digital signatures, multifactor authentication and session breaks offer additional controls when transacting over the internet.

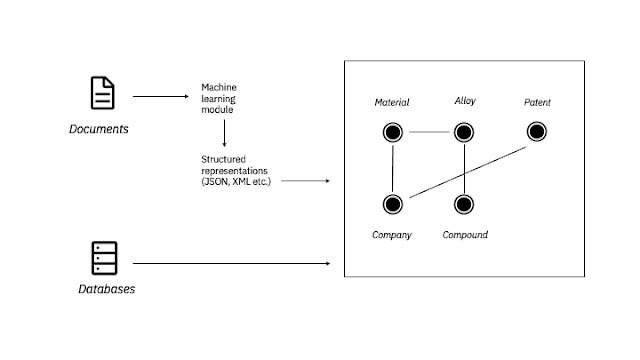
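
To make the discovery and classification step described above a little more concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular product works: the pattern names, the `classify` and `scan` helpers and the sample records are all hypothetical, and real classification tools rely on far richer rules than two regular expressions.

```python
import re

# Hypothetical patterns for illustration; real classifiers use far richer rules.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def classify(text):
    """Return the sorted list of sensitive-data labels found in the text."""
    return sorted(
        label for label, pattern in PATTERNS.items() if pattern.search(text)
    )


def scan(records):
    """Map each record name to its labels, skipping records with no matches."""
    results = {}
    for name, text in records.items():
        labels = classify(text)
        if labels:
            results[name] = labels
    return results


if __name__ == "__main__":
    # Made-up sample records; the card number is a well-known test value.
    sample = {
        "order_1001.txt": "Ship to jane@example.com, paid with 4111 1111 1111 1111",
        "packing_list.txt": "12 crates of green coffee, lot 7",
    }
    print(scan(sample))
```

A scan like this only tells you where protected data probably lives. Encryption, access policies and monitoring still have to be applied to whatever it finds.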

◉ Permissioned controls for data exchange and visibility. Multi-enterprise business networks ensure secure and reliable information exchange between strategic partners with tools for user- and role-based access. Identity and access management security practices are critical to securely share proprietary and sensitive data across a broad ecosystem, while finding and mitigating vulnerabilities lowers risk of improper access and breaches. Database activity monitoring, privileged user monitoring and alerting provide visibility to catch issues quickly. Adding blockchain technology to a multi-enterprise business network provides multi-party visibility of a permissioned, immutable shared record that fuels trust across the value chain.

◉ Trust, transparency and provenance. With a blockchain platform, once data is added to the ledger it cannot be manipulated, changed or deleted, which helps prevent fraud, authenticate provenance and monitor product quality. Participants from multiple enterprises can track materials and products from source to end customer or consumer. All data is stored on blockchain ledgers, protected with the highest level of commercially available, tamper-resistant encryption.

◉ Third-party risk management. As connections and interdependencies between companies and third parties grow across the supply chain ecosystem, organizations need to expand their definition of vendor risk management to include end-to-end security. Doing so allows companies to assess, improve, monitor and manage risk throughout the life of the relationship. Start by bringing your own business and technical teams together with partners and vendors to identify critical assets and potential damage to business operations in the event of a compliance violation, system shutdown, or data breach that goes public.

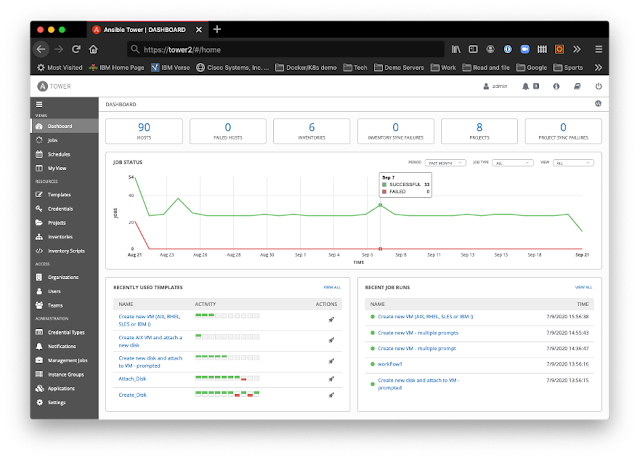
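
The tamper-evidence idea behind the "Trust, transparency and provenance" practice above can be illustrated with a small hash-linked ledger. This is a sketch of the hash-chaining concept only, not a blockchain platform: there is no network, consensus or permissioning here, and the `append` and `verify` helpers and the sample payloads are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(payload, prev_hash):
    """Hash an entry's payload together with the previous entry's hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(ledger, payload):
    """Append a new entry linked to the current tail of the ledger."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append(
        {"payload": payload, "prev": prev_hash, "hash": entry_hash(payload, prev_hash)}
    )


def verify(ledger):
    """Return True only if every entry still matches its hash and its link."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["payload"], entry["prev"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append(ledger, {"lot": "A-17", "event": "harvested"})
    append(ledger, {"lot": "A-17", "event": "shipped"})
    print(verify(ledger))  # True: the chain is intact

    ledger[0]["payload"]["event"] = "rejected"  # simulate tampering
    print(verify(ledger))  # False: the stored hash no longer matches
```

Because each entry's hash covers the previous entry's hash, changing an old record invalidates it and breaks the link to everything after it. In a shared ledger, other participants holding their own copies are what make such a change detectable in practice.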

◉ Incident response planning and orchestration. Proactively preparing for a breach, shutdown or disruption, and having a robust incident response plan in place, is vital. Practiced, tested and easily executed response plans and remediation prevent loss of revenue, damage to reputation, and partner and customer churn. Intelligence and plans provide metrics and learnings your organization and partners can use to make decisions to prevent attacks or incidents from occurring again.

Supply chain security will continue to get even smarter. As an example, solutions are beginning to incorporate AI to proactively detect suspicious behavior by identifying anomalies, patterns and trends that suggest unauthorized access. AI-powered solutions can send alerts for human response or automatically block attempts.
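
As a rough illustration of what "identifying anomalies" can mean, the sketch below flags an observation that sits far outside a historical baseline. It uses a plain statistical rule (a z-score against past values) as a stand-in for the AI-powered solutions mentioned above. The `is_anomalous` helper, the threshold of 3 and the sample login counts are all assumptions made for the example.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, threshold=3.0):
    """Return True if `observed` is more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any different value stands out.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


if __name__ == "__main__":
    # Made-up hourly counts of failed logins for one trading-partner account.
    failed_logins = [12, 15, 11, 14, 13, 12, 16]

    print(is_anomalous(failed_logins, 14))  # False: within the usual range
    print(is_anomalous(failed_logins, 90))  # True: worth an alert or a block
```

A result of `True` could trigger an alert for human review or an automatic block, which mirrors the two response options described above.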

How are companies ensuring supply chain security today?

IBM Sterling Supply Chain Business Network set a new record in secure business transactions in September of this year, up 29% from September 2019, and is helping customers around the world rebuild their business with security and trust in spite of the global pandemic.

International money transfer service provider Western Union completed more than 800 million transactions in 2018 for consumer and business clients rapidly, reliably and securely with a trusted file transfer infrastructure.

Fashion retailer Eileen Fisher used an intelligent, omnichannel fulfillment platform to build a single pool of inventory across channels, improving trust in inventory data, executing more flexible fulfillment and reducing customer acquisition costs.

Blockchain ecosystem Farmer Connect transparently connects coffee growers to the consumers they serve, with a blockchain platform that incorporates network and data security to increase trust, safety and provenance.

Financial services provider Rosenthal & Rosenthal replaced its multiple approaches to electronic data interchange (EDI) services with a secure, cloud-based multi-enterprise business network, reducing its operational costs while delivering a responsive, high-quality service to every client.

Integrated logistics provider VLI is meeting myriad government compliance and safety regulations, and allowing its 9,000 workers access to the right systems at the right time to do their jobs, with an integrated suite of security solutions that protect its business and assets and manage user access.

Solutions to keep your supply chain secure

Keep your most sensitive data safe, visible to only those you trust, immutable to prevent fraud and protected from third-party risk.

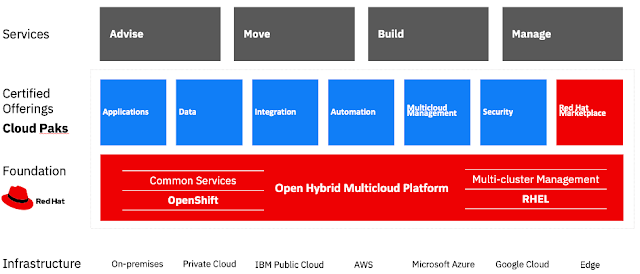

Let IBM help you protect your supply chain, with battle-tested security that works regardless of your implementation approach – on-premises, cloud or hybrid.

Source: ibm.com