Monday, 23 October 2023

How foundation models can help make steel and cement production more sustainable

Sunday, 8 May 2022

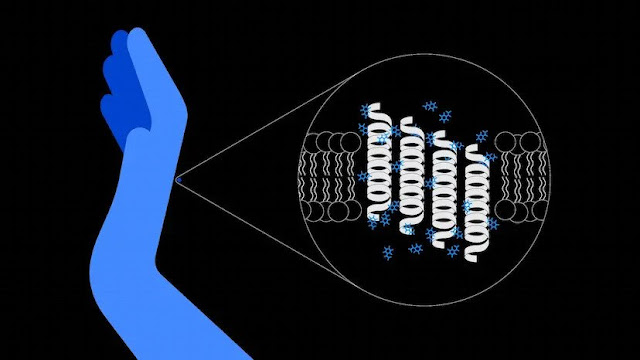

Computer simulations identify new ways to boost the skin’s natural protectors

Working with Unilever and the UK’s STFC Hartree Centre, IBM Research uncovered how skin can boost its natural defense against germs.

Simulating molecular interactions

A radical discovery process — and a map for hunting new bioactives

Sunday, 10 April 2022

What Is Quantum-Safe Cryptography, and Why Do We Need It?

How to prepare for the next era of computing with quantum-safe cryptography.

Cryptography helps to provide security for many everyday tasks. When you send an email, make an online purchase or make a withdrawal from an ATM, cryptography helps keep your data private and authenticate your identity.

Today’s cryptographic algorithms derive their strength from the difficulty of solving certain math problems on classical computers, or from the difficulty of searching for the right secret key or message. Quantum computers, however, work in a fundamentally different way. For some of these problems, a computation that might take millions of years on a classical computer could take hours or minutes on a sufficiently large quantum computer. That will significantly affect the encryption, hashing and public key algorithms we use today. This is where quantum-safe cryptography comes in.

According to ETSI, “Quantum-safe cryptography refers to efforts to identify algorithms that are resistant to attacks by both classical and quantum computers, to keep information assets secure even after a large-scale quantum computer has been built.”

What is quantum computing?

Quantum computers are not just more powerful supercomputers. A classical processor computes with bits, each either a 1 or a 0. A quantum computer instead uses quantum bits, or qubits (pronounced CUE-bits), to run multidimensional quantum algorithms. Groups of qubits in superposition can create complex, multidimensional computational spaces in which complex problems can be represented in new ways. This opens up new possibilities for solving challenging problems that classical computers can’t tackle.

There are many exciting applications in health and science, such as molecular simulation, which has the potential to speed up the discovery of new life-saving drugs. However, quantum computers will also be able to solve the math problems that give many cryptographic algorithms their strength.

How will quantum computing impact cryptography?

Two of the main types of cryptographic algorithms used to protect data today work in different ways:

◉ Symmetric algorithms use the same secret key to encrypt and decrypt data.

◉ Asymmetric algorithms, also known as public key algorithms, use two keys that are mathematically related: a public key and a private key.
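To make “mathematically related” keys concrete, here is a toy sketch of textbook RSA, one widely used public key algorithm (the post does not name it; choosing RSA here is our assumption). The primes 61 and 53, the exponent 17 and the helper names are illustrative only. Real deployments use primes hundreds of digits long plus padding, which this omits. Note that the private exponent is computed from p and q, so anyone who can factor n can recover it.

```python
from typing import Tuple

Key = Tuple[int, int]  # (modulus, exponent)

def make_toy_keypair(p: int, q: int, e: int) -> Tuple[Key, Key]:
    """Return (public_key, private_key) for textbook RSA. Toy sizes only."""
    n = p * q                # public modulus, shared by both keys
    phi = (p - 1) * (q - 1)  # Euler's totient of n; computing it requires p and q
    d = pow(e, -1, phi)      # private exponent: inverse of e mod phi (Python 3.8+)
    return (n, e), (n, d)

def encrypt(message: int, public_key: Key) -> int:
    n, e = public_key
    return pow(message, e, n)

def decrypt(ciphertext: int, private_key: Key) -> int:
    n, d = private_key
    return pow(ciphertext, d, n)

public_key, private_key = make_toy_keypair(61, 53, 17)
ciphertext = encrypt(65, public_key)
recovered = decrypt(ciphertext, private_key)
print(public_key, private_key)  # (3233, 17) (3233, 2753)
print(ciphertext, recovered)    # 2790 65
```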

The development of public key cryptography in the 1970s was revolutionary, enabling new ways of communicating securely. However, public key algorithms are vulnerable to quantum attacks because they derive their strength from the difficulty of factoring large integers or solving the discrete logarithm problem. As mathematician Peter Shor showed, a sufficiently large quantum computer can solve these problems very quickly. Today’s public key algorithms will therefore be completely broken, so asymmetric (public key) cryptography needs new math that can stand up to quantum attacks.
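A minimal sketch of why this matters for factoring: in Shor’s algorithm, the quantum computer’s job is to find the period r of a^x mod n, and the rest is classical arithmetic. In this sketch the period is found by brute force, which takes exponential time for real key sizes; that loop is exactly the step a quantum computer would replace. The values n = 15 and a = 7 and the helper names are illustrative assumptions.

```python
from math import gcd
from typing import Optional, Tuple

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found here by brute force.
    This loop is the step Shor's algorithm performs efficiently on a quantum
    computer; classically it is infeasible for RSA-sized n. Needs gcd(a, n) == 1."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a: int, n: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing from Shor's algorithm: turn a period into factors."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # a**(r/2) is -1 mod n: retry with a different a
    return gcd(half - 1, n), gcd(half + 1, n)

print(find_period(7, 15))         # 4
print(factor_from_period(7, 15))  # (3, 5)
```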

Grover’s algorithm, devised by computer scientist Lov Grover, is a quantum search algorithm. Because it speeds up brute-force search quadratically, it weakens some symmetric algorithms and breaks others. Key size and message digest size are important factors in whether an algorithm is quantum-safe. For example, the Advanced Encryption Standard (AES) with 256-bit keys is considered quantum-safe, but Triple DES (TDES) can be broken no matter the key size.
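A rough back-of-the-envelope sketch of how key size enters the picture: Grover’s algorithm finds one item among N candidates in about (π/4)·√N iterations, so a k-bit key (or a k-bit digest, for preimage search) offers roughly k/2 bits of security against it. The function name is hypothetical, and this model ignores practical costs such as quantum error correction and the difficulty of parallelizing Grover’s algorithm.

```python
import math

def grover_security_bits(key_bits: int) -> float:
    """log2 of the approximate Grover iteration count, (pi/4) * sqrt(2**key_bits),
    for finding one key (or hash preimage) among 2**key_bits candidates."""
    return math.log2(math.pi / 4) + key_bits / 2

for bits in (128, 192, 256):
    # Classical exhaustive search needs on the order of 2**bits trials.
    print(f"{bits}-bit key: classical ~2^{bits}, Grover ~2^{grover_security_bits(bits):.1f}")
# 128-bit key: classical ~2^128, Grover ~2^63.7
# 192-bit key: classical ~2^192, Grover ~2^95.7
# 256-bit key: classical ~2^256, Grover ~2^127.7
```

Under this model, AES-256 still leaves an attacker with roughly 2^128 work.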

What is being done to address future quantum threats?

The good news is that researchers and standards bodies are moving to address the threat. The National Institute of Standards and Technology (NIST) initiated a Post-Quantum Cryptography Standardization Program to identify new algorithms that can resist threats posed by quantum computers.

After three rounds of evaluation, NIST has identified seven finalists. NIST plans to select a small number of new quantum-safe algorithms early this year and to have new quantum-safe standards in place by 2024. As part of this program, IBM researchers have been involved in developing three of the algorithms in the final round, all based on lattice cryptography: CRYSTALS-Kyber, CRYSTALS-Dilithium and Falcon.
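To give a feel for what “based on lattice cryptography” means, here is a toy, Regev-style encryption of a single bit using the Learning With Errors (LWE) problem. CRYSTALS-Kyber and CRYSTALS-Dilithium build on module variants of LWE, while Falcon uses NTRU lattices. This sketch is not any of those schemes: the parameters are far too small to be secure, and all names and values are illustrative assumptions. The idea is that the public key hides the secret behind small random noise, and decryption works because the accumulated noise stays below Q/4.

```python
import random

Q = 257          # modulus (toy value)
N_DIM = 8        # secret dimension (toy value; real schemes use hundreds)
M_SAMPLES = 20   # number of public LWE samples

def keygen(rng: random.Random):
    s = [rng.randrange(Q) for _ in range(N_DIM)]                        # secret vector
    a = [[rng.randrange(Q) for _ in range(N_DIM)] for _ in range(M_SAMPLES)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M_SAMPLES)]              # small noise
    b = [(sum(ai * si for ai, si in zip(row, s)) + ei) % Q for row, ei in zip(a, e)]
    return (a, b), s                                                    # public key, secret

def encrypt_bit(bit: int, public_key, rng: random.Random):
    a, b = public_key
    subset = [i for i in range(M_SAMPLES) if rng.random() < 0.5]       # random subset of samples
    u = [sum(a[i][j] for i in subset) % Q for j in range(N_DIM)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v

def decrypt_bit(ciphertext, s) -> int:
    u, v = ciphertext
    d = (v - sum(uj * sj for uj, sj in zip(u, s))) % Q  # = noise + bit * (Q // 2), mod Q
    # |noise| <= M_SAMPLES = 20 < Q / 4, so d lands near 0 for bit 0 and near Q/2 for bit 1.
    return 1 if Q // 4 < d < 3 * Q // 4 else 0

rng = random.Random(0)
public_key, secret = keygen(rng)
for bit in (0, 1):
    assert decrypt_bit(encrypt_bit(bit, public_key, rng), secret) == bit
```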

How should enterprises be preparing to adopt quantum-safe cryptography?

Fortunately, we have time to implement quantum-safe solutions before the advent of large-scale quantum computers — but not much time. Moving to new cryptography is complex and will require significant time and investment. We don’t know when a large-scale quantum computer capable of breaking public key cryptographic algorithms will be available, but experts predict that this could be possible by the end of the decade.
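One common way to reason about “we have time, but not much” is Mosca’s inequality, a heuristic proposed by Michele Mosca rather than something from this post. If x is how many years data must stay confidential, y is how long migration takes, and z is how long until a quantum computer can break today’s public key algorithms, then x + y > z means that data is already at risk. The inputs below are hypothetical, not predictions.

```python
def at_quantum_risk(shelf_life_years: float, migration_years: float,
                    years_until_quantum: float) -> bool:
    """Mosca's inequality: data is exposed if it must stay secret (x) longer than
    the time left after migration finishes (z - y), i.e. if x + y > z."""
    return shelf_life_years + migration_years > years_until_quantum

# Hypothetical inputs:
print(at_quantum_risk(shelf_life_years=10, migration_years=5, years_until_quantum=8))  # True
print(at_quantum_risk(shelf_life_years=1, migration_years=2, years_until_quantum=8))   # False
```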

Also, attackers can harvest encrypted data today and store it until they can decrypt it with a quantum computer, so sensitive data with a long lifespan is already vulnerable. Government bodies in the United States and Germany have already issued quantum-safe cryptography requirements for government agencies. BSI, a German federal agency, requires hybrid schemes, in which both classical and quantum-safe algorithms are used, for protection in high-security applications. In the United States, the White House issued a memo requiring federal agencies to begin quantum-safe modernization planning.
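A hybrid scheme typically combines a classical shared secret and a quantum-safe one into a single session key. The sketch below assumes a simple concatenate-then-KDF combiner using HKDF (RFC 5869) built from Python’s standard `hmac` and `hashlib`. The goal is that the derived key stays secure as long as at least one input secret does. The two secrets are random placeholders standing in for, say, an elliptic-curve Diffie-Hellman result and the output of a quantum-safe KEM such as CRYSTALS-Kyber. The helper names and the context label are hypothetical, and this is not BSI’s specified construction.

```python
import hashlib
import hmac
import os

def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()   # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                              # expand step: T(i) = HMAC(PRK, T(i-1) | info | i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def hybrid_session_key(classical_secret: bytes, pq_secret: bytes, context: bytes) -> bytes:
    # Feed both shared secrets into one KDF so an attacker must recover both.
    return hkdf_sha256(classical_secret + pq_secret, salt=b"\x00" * 32, info=context)

# Placeholders: random bytes stand in for the two real key-exchange outputs.
classical_secret = os.urandom(32)
pq_secret = os.urandom(32)
key = hybrid_session_key(classical_secret, pq_secret, b"example hybrid handshake")
print(len(key))  # 32
```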

How can IBM help?

As we prepare for a quantum world, IBM is committed to developing and deploying new quantum-safe cryptographic technology. Trusted hardware platforms will play a critical role in the adoption of quantum-safe cryptography, and IBM Z has already begun the modernization process. IBM z15 introduced lattice-based digital signatures for signing audit records in z/OS, and it also gave application developers the ability to begin experimenting with quantum-safe lattice-based digital signatures. Having already started this process helps us understand the implications of moving to new algorithms, so we can pass those insights on to our clients.

Preparing to adopt quantum-safe standards

When meeting with clients getting started on their journey to quantum safety, we share a few of the key milestones to help them get ready to adopt new quantum-safe standards:

◉ Discover and classify data: The first step involves classifying the value of your data and understanding compliance requirements. This helps you create a data inventory.

◉ Create a crypto inventory: Once you have classified your data, identify how it is encrypted, along with any other uses of cryptography, to build a crypto inventory that will help you plan your migration. Your crypto inventory will include information such as encryption protocols, symmetric and asymmetric algorithms, key lengths and crypto providers.

◉ Embrace crypto agility: The transition to quantum-safe standards will be a multi-year journey as standards evolve and vendors move to adopt quantum-safe technology. Take a flexible approach and be prepared to make replacements. As industry experts recommend, implement a hybrid approach that uses both classical and quantum-safe cryptographic algorithms. This maintains compliance with current standards while adding quantum-safe protection.
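A crypto inventory can start as a simple structured list. In this minimal sketch, each record holds fields like those named in the milestones (protocol, algorithm, key length, provider). A hypothetical `assess` function then flags entries using the quantum impacts described in this post. The field names, the rule table, the priority labels and the sample entries are all assumptions for illustration. Keeping the rules in one table is a small nod to crypto agility, because when standards change you update the table rather than every caller.

```python
from dataclasses import dataclass

# Hypothetical rule table, based on the quantum impacts described in this post.
SHOR_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "EdDSA"}  # factoring / discrete-log based
NOT_QUANTUM_SAFE_SYMMETRIC = {"TDES"}                               # broken at any key size

@dataclass
class InventoryEntry:
    system: str      # where the cryptography is used
    protocol: str    # e.g. "TLS 1.2"
    algorithm: str   # e.g. "RSA", "AES"
    key_bits: int
    provider: str    # library or hardware module supplying the crypto

def assess(entry: InventoryEntry) -> str:
    """Return a coarse migration priority for one inventory entry."""
    if entry.algorithm in SHOR_VULNERABLE:
        return "replace: public key algorithm broken by Shor's algorithm"
    if entry.algorithm in NOT_QUANTUM_SAFE_SYMMETRIC:
        return "replace: not quantum-safe at any key size"
    if entry.algorithm == "AES" and entry.key_bits < 256:
        return "review: key strength reduced by Grover's algorithm"
    return "keep: monitor as standards evolve"

inventory = [  # hypothetical sample entries
    InventoryEntry("payments-api", "TLS 1.2", "RSA", 2048, "OpenSSL"),
    InventoryEntry("batch-archive", "none", "TDES", 168, "legacy HSM"),
    InventoryEntry("backup-store", "none", "AES", 128, "OpenSSL"),
    InventoryEntry("audit-log", "none", "AES", 256, "HSM"),
]
for item in inventory:
    print(f"{item.system:<14} {item.algorithm:<5} {item.key_bits:>5}  {assess(item)}")
```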

Tuesday, 5 April 2022

The IBM Research innovations powering IBM z16

From 7 nanometer node chips to built-in AI acceleration and privacy, IBM Research was behind many of the groundbreaking aspects of the new IBM z16 system.

Scaling to 7 nm

A powerful chip with AI at its core

With Telum, it’s possible to detect fraud during the instant of a transaction. Finance houses can determine which trades are most at risk before settlement, and people could find out in an instant whether they’ve been approved for a loan.

Security for today and tomorrow

Tuesday, 14 December 2021

How AI will help code tomorrow’s machines

At NeurIPS 2021, IBM Research presents its work on CodeNet, a massive dataset of code samples and problems. We believe that it has the potential to revitalize techniques for modernizing legacy systems, helping developers write better code, and potentially even enabling AI systems to help code the computers of tomorrow.

Experiments on CodeNet

Further use cases for CodeNet

What differentiates CodeNet

How CodeNet is constructed

What’s next for CodeNet

Tuesday, 10 August 2021

IBM Research and Red Hat work together to take a load off predictive resource management

PayPal, the global payments giant, has already started putting load-aware scheduling into production.

The auto-scaler has just been made generally available and supported by Red Hat in OpenShift 4.8, and load-aware scheduling is expected to be available in the next release of OpenShift. “Our collaboration with IBM Research takes an upstream-first approach, helping to fuel innovation in the Kubernetes community.”

Saturday, 6 March 2021

Going beyond Women’s History Month: Celebrating & empowering women scientists

As we kick off Women’s History Month, we look forward to celebrating International Women’s Day on March 8. At IBM, we are committing to the theme of “Women Rising,” which has personal and professional resonance for me as a woman in fields that, unfortunately, continue to be predominantly male: science, technology, engineering, and math (STEM). Although women account for half of the US’s college-educated workforce, they make up only 28 percent of the STEM workforce and 13 percent of inventors.

And while we have made progress over the last three years at IBM in both hiring and representation, for women globally and for underrepresented minorities in the US, we know we can and must do more to ensure women feel empowered to be their authentic selves, to build their skills, and to rise to their full potential.

At IBM Research, we value a diverse and inclusive workforce, and we recognize that we need women researchers and scientists more than ever to solve some of the world’s greatest challenges. We face complex global issues of unprecedented magnitude that could threaten our future if left unchecked, such as climate change, viruses affecting human health and livelihoods, threats to our food supply, and widening inequality. Through IBM’s advancements in hybrid cloud, artificial intelligence, quantum computing, and other scientific fields, many of which were achieved by women, we have a unique opportunity to make important contributions to society through our diverse teams.

In order to create a culture and climate within and outside of IBM Research that embraces, supports, and enables successful careers in STEM, we need to work together every day to hire, empower, and grow women in STEM fields.

The data is clear: Diverse teams are more innovative and solve problems faster. As part of my commitment to tackling the issue of empowering women in science, I’m proud to serve as one of the executive sponsors of the IBM Research Diversity & Inclusion Council, which is responsible for ensuring IBM Research is leveraging our innovation and influence to make meaningful changes in support of diversity, inclusion, and racial justice.

To celebrate Women’s History Month, we will spotlight women researchers and scientists from various disciplines, experiences, and tenures at IBM Research and share their insights about their role at IBM, career journeys, inspirations, and guidance for fellow women in STEM.

Source: ibm.com