Saturday, 29 April 2023

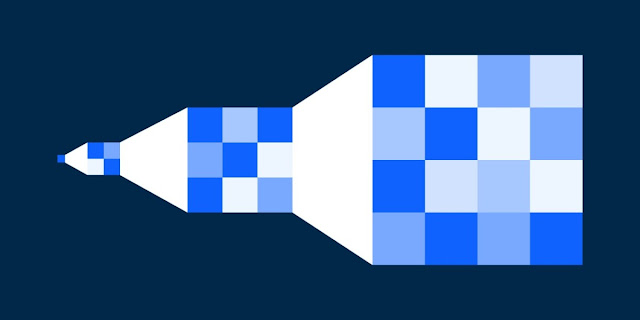

Why optimize your warehouse with a data lakehouse strategy

Friday, 28 April 2023

Cloud scalability: Scale-up vs. scale-out

Scale-up vs. Scale-out

What is scale-up (or vertical scaling)?

What is scale-out (or horizontal scaling)?

IBM Turbonomic and the upside of cloud scalability

Thursday, 27 April 2023

The Role of IBM C2090-619 Certification in a Cloud-based World

As the technology industry grows, certifications have become a crucial aspect of career development for IT professionals. The IBM C2090-619 certification exam is one such certification, and it focuses on IBM Informix 12.10 system administration. This article covers the IBM C2090-619 certification exam, including its benefits, exam format, and study resources.

IBM C2090-619 Certification Exam Format

The IBM C2090-619 certification exam, also known as the IBM Certified System Administrator - Informix 12.10 exam, evaluates the candidate's proficiency in IBM Informix 12.10 system administration. The cost to take this exam is $200 (USD). The exam lasts 90 minutes and presents candidates with 70 multiple-choice questions. To pass, candidates must obtain a minimum score of 68%. Here is a breakdown of the IBM C2090-619 certification exam content:

Installation and Configuration 17%

Space Management 11%

System Activity Monitoring and Troubleshooting 13%

Performance Tuning 16%

OAT and Database Scheduler 4%

Backup and Restore 10%

Replication and High Availability 16%

Warehousing 4%

Security 9%
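The figures above can be turned into a quick study-planning calculation. The following is a minimal sketch, not anything published by IBM: it assumes every question carries equal weight and that the section percentages map proportionally onto the 70 questions, neither of which the exam description confirms. The function and variable names are illustrative.

```python
# Section weights as listed in the breakdown above (percent of exam content).
SECTION_WEIGHTS = {
    "Installation and Configuration": 17,
    "Space Management": 11,
    "System Activity Monitoring and Troubleshooting": 13,
    "Performance Tuning": 16,
    "OAT and Database Scheduler": 4,
    "Backup and Restore": 10,
    "Replication and High Availability": 16,
    "Warehousing": 4,
    "Security": 9,
}

TOTAL_QUESTIONS = 70
PASSING_PERCENT = 68


def questions_needed_to_pass(total=TOTAL_QUESTIONS, passing_percent=PASSING_PERCENT):
    """Smallest number of correct answers that reaches the passing percentage.

    Assumption: each question is worth the same amount.
    """
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-total * passing_percent // 100)


def approximate_questions_per_section(total=TOTAL_QUESTIONS):
    """Rough per-section question counts, assuming proportional allocation."""
    return {name: round(total * pct / 100) for name, pct in SECTION_WEIGHTS.items()}


if __name__ == "__main__":
    print("Correct answers needed:", questions_needed_to_pass())
    for section, count in approximate_questions_per_section().items():
        print(f"{section}: about {count} questions")
```

Treat the per-section counts as a guide for dividing study time rather than as a statement of how the real exam is assembled.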

Prerequisites for the Exam

To take the IBM C2090-619 certification exam, candidates should meet the following prerequisites:

1. Knowledge of SQL

Candidates should have a solid understanding of SQL (Structured Query Language) and be able to write basic SQL statements.

2. Knowledge of IBM DB2

Candidates should have experience working with IBM DB2 database software and be familiar with its features and functionality.

3. Basic knowledge of Linux

Candidates should have a basic understanding of Linux operating system commands and functionality.

4. Experience with IBM Data Studio

Candidates should be familiar with IBM Data Studio, which is a tool used to manage IBM databases.

5. Familiarity with data warehousing concepts

Candidates should have a basic understanding of data warehousing concepts such as data modeling, data integration, and ETL (Extract, Transform, Load) processes.

It is important to note that meeting these prerequisites does not guarantee success in passing the exam. Candidates should also have hands-on experience working with IBM DB2 database software and other related technologies. Additionally, it is suggested that candidates have experience working on real-world projects involving IBM DB2 and data warehousing to ensure they have a practical understanding of the exam topics.

Benefits of IBM C2090-619 Certification

The IBM C2090-619 certification is designed for professionals who administer IBM Informix 12.10 systems. The certification exam helps professionals validate their expertise and knowledge in the field, enhancing their chances of career advancement. Here are some of the benefits of obtaining the IBM C2090-619 certification:

1. Validation of Expertise in IBM Informix 12.10 System Administration

The IBM Informix System Administrator Exam validates a candidate's proficiency and knowledge in IBM Informix 12.10 System Administration. This certification demonstrates that the candidate has the necessary skills and expertise to manage and maintain an IBM Informix 12.10 database system.

2. Increased Career Opportunities and Salary

Obtaining the IBM C2090-619 certification can increase a candidate's career opportunities and earning potential. Employers value certified experts and are more likely to offer promotions, salary increases, and better job opportunities to accredited candidates.

3. Enhancement of Professional Credibility

The IBM C2090-619 certification enhances a candidate's professional credibility in the IT industry. This certification demonstrates that the candidate is committed to their professional development and has invested time and effort in acquiring new skills and knowledge.

4. Access to a Community of Certified Professionals

Certified IBM Informix 12.10 System Administrators can access a community of certified professionals. This community provides a platform for networking, sharing knowledge, and collaborating on projects.

5. Opportunity to Work on Challenging Projects

The IBM C2090-619 certification can allow candidates to work on challenging projects. Certified professionals are often assigned to high-profile projects that require advanced skills and expertise in IBM Informix 12.10 System Administration.

Study Resources for IBM C2090-619 Certification Exam

The IBM Informix System Administrator Exam requires thorough preparation. IBM offers various study resources to help candidates prepare for the exam, including:

1. IBM C2090-619 Exam Objectives

The IBM C2090-619 exam objectives outline the exam content and the skills required to pass the exam. Candidates should use this resource as the basis for their study plan.

2. IBM Knowledge Center

The IBM Knowledge Center is an online resource that provides comprehensive documentation on IBM products and solutions. It offers valuable information on IBM Informix 12.10 System Administration, which is the focus of the IBM Informix System Administrator Exam.

3. IBM Training Courses

IBM offers various training courses to help candidates prepare for the IBM Informix System Administrator Exam. These courses cover different topics related to IBM Informix 12.10 System Administration and are delivered online or in person.

4. Practice Tests

IBM provides practice tests that simulate the IBM Informix System Administrator Exam. These practice tests help candidates evaluate their readiness for the exam and identify areas that need improvement.

Conclusion

The IBM Informix System Administrator Exam is an excellent opportunity for professionals working in IBM Informix 12.10 system administration to validate their skills and enhance their career prospects. With the proper preparation, candidates can pass the exam and gain the benefits of certification. This article has given an overview of the IBM C2090-619 certification exam, including its benefits, exam format, and study resources.

Tuesday, 25 April 2023

Why companies need to accelerate data warehousing solution modernization

Unexpected situations like the COVID-19 pandemic and the ongoing macroeconomic atmosphere are wake-up calls for companies worldwide to exponentially accelerate digital transformation. During the pandemic, when lockdowns and social-distancing restrictions transformed business operations, it quickly became apparent that digital innovation was vital to the survival of any organization.

The dependence on remote internet access for business, personal, and educational use elevated the data demand and boosted global data consumption. Additionally, the increase in online transactions and web traffic generated mountains of data. Enter the modernization of data warehousing solutions.

Companies realized that their legacy or enterprise data warehousing solutions could not manage the huge workload. Innovative organizations sought modern solutions to manage larger data capacities and attain secure storage solutions, helping them meet consumer demands. One of these advances included the accelerated adoption of modernized data warehousing technologies. Business success and the ability to remain competitive depended on it.

Why data warehousing is critical to a company’s success

Data warehousing is the secure electronic storage of information by a company or organization. It creates a trove of historical data that can be retrieved, analyzed, and reported on to provide insight into, or predictive analysis of, an organization’s performance and operations.

Data warehousing solutions drive business efficiency, build future analysis and predictions, enhance productivity, and improve business success. These solutions categorize and convert data into readable dashboards that anyone in a company can analyze. Data is reported from one central repository, enabling management to draw more meaningful business insights and make faster, better decisions.

By running reports on historical data, a data warehouse can clarify which systems and processes are working and which need improvement. A data warehouse is also the base architecture for artificial intelligence and machine learning (AI/ML) solutions.

Benefits of new data warehousing technology

Everything is data, regardless of whether it’s structured, semi-structured, or unstructured. Most enterprise or legacy data warehouses support only structured data through relational database management system (RDBMS) databases, so companies require additional resources and people to process the rest of their enterprise data. It is nearly impossible to achieve business efficiency and agility with legacy tools that create inefficiency and elevate costs.

Managing, storing, and processing data is critical to business efficiency and success. Modern data warehousing technology can handle all data forms. Significant developments in big data, cloud computing, and advanced analytics created the demand for the modern data warehouse.

Today’s data warehouses are different from antiquated single-stack warehouses. Instead of focusing primarily on data processing, as legacy or enterprise data warehouses did, the modern version is designed to store tremendous amounts of data from multiple sources in various formats and produce analysis to drive business decisions.

Data warehousing solutions

A superior solution for companies is the integration of existing on-premises data warehousing with data lakehouse solutions using data fabric and data mesh technology. Doing so creates a modern data warehousing solution for the long term.

A data lakehouse contains an organization’s data in structured, semi-structured, and unstructured form, which can be stored indefinitely for immediate or future use. This data is used by data scientists and engineers who study it to gain business insights. Data lake and data lakehouse storage is less expensive than an enterprise data warehouse. Further, data lakes and data lakehouses are less time-consuming to manage, which reduces operational costs. IBM has a next-generation data lakehouse solution to address these business needs.

Data fabric is the next-generation data analytics platform that solves advanced data security challenges through decentralized ownership. Typically, organizations have multiple data sources from different business lines that must be integrated for analytics. A data fabric architecture effectively unites disparate data sources and links them through centrally managed data sharing and governance guidelines.

Many enterprises seek a flexible, hybrid, and multi-cloud solution based on cloud providers. The data mesh solution manages the data catalog and pushes structured query language (SQL) queries down to the related RDBMS or data lakehouse, giving users virtualized tables and data. Under data mesh principles, the solution never stores business data locally, which is an advantage for a business. A successful data mesh solution will reduce a company’s capital and operational expenses.
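The query pushdown idea can be illustrated with a small sketch. This is a toy model under stated assumptions, not how IBM Cloud Pak for Data or any specific product works: two in-memory SQLite databases stand in for an RDBMS and a data lakehouse, and the `Catalog` class, its methods, and the table names are hypothetical. The point it shows is that the catalog layer holds only a mapping from virtual table names to sources, while the SQL itself runs where the data lives.

```python
import sqlite3


def make_source(table, rows):
    """Create an in-memory SQLite database standing in for a remote data source."""
    conn = sqlite3.connect(":memory:")
    conn.execute(f"CREATE TABLE {table} (region TEXT, amount REAL)")
    conn.executemany(f"INSERT INTO {table} VALUES (?, ?)", rows)
    return conn


class Catalog:
    """Hypothetical data catalog: maps virtual table names to the source that owns them.

    It stores no business data itself, only connections to the sources.
    """

    def __init__(self):
        self._sources = {}

    def register(self, virtual_table, connection):
        self._sources[virtual_table] = connection

    def query(self, virtual_table, sql, params=()):
        # Push the SQL down to the owning source; only result rows travel back.
        conn = self._sources[virtual_table]
        return conn.execute(sql, params).fetchall()


catalog = Catalog()
catalog.register("orders", make_source("orders", [("EU", 120.0), ("US", 80.0), ("EU", 30.0)]))
catalog.register("clicks", make_source("clicks", [("EU", 5.0), ("US", 9.0)]))

# The aggregation executes inside the "orders" source, not in the catalog layer.
eu_total = catalog.query(
    "orders", "SELECT SUM(amount) FROM orders WHERE region = ?", ("EU",)
)
print(eu_total)
```

A real deployment would also need to handle authentication, governance policies, and queries that span several sources, all of which this sketch leaves out.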

IBM Cloud Pak for Data is an excellent example of a data fabric and data mesh solution for analytics. Cloud technology has emerged as the preferred platform for artificial intelligence (AI) capabilities, intelligent edge services, advanced wireless connectivity, and more. Many companies will leverage a hybrid, multi-cloud strategy to improve business performance and thrive in the business world.

Best practices for adopting data warehousing technology

Data warehouse modernization includes extending the infrastructure without compromising security. This allows companies to reap the advantages of new technologies, bringing speed and agility to data processes, meeting changing business requirements, and staying relevant in this age of big data. The growing variety and volume of current data make it essential for businesses to modernize their data warehouses to remain competitive in today’s market. Businesses need valuable insights and reports in real time, and enterprise or legacy data warehouses cannot keep pace with modern data demands.

Data warehouses are at an exciting point of evolution. With the global data warehousing market size estimated to grow by over 250% in the next five years, companies will rely on new data warehouse solutions and tools that are easier to use than ever before.

Cutting-edge technology to keep up with constant changes

AI and other breakthrough technologies will propel organizations into the next decade. Data consumption and load will continue to grow and provoke companies to discover new ways to implement state-of-the-art data warehousing solutions. The prevalence of digital technologies and connected devices will help organizations remain afloat, an unimaginable feat 20 years ago.

Essential lessons arise from an organization’s efforts to optimize its enterprise or legacy data warehousing technology. One vital lesson is the importance of making specific changes to modernize technology, processes, and organizational operations to evolve. As the rate of change will only continue to increase, this knowledge—and the capability to accelerate modernization—will be critical going forward.

No matter where you are in your data warehouse modernization today, IBM experts are here to help you find the right approach to fit your needs. It’s time to get started with your data warehouse modernization journey.

Source: ibm.com
