Hybrid cloud could ultimately enable a new era of discovery, using the best resources available at the right times, no matter the size or complexity of the workload, to maximize performance and speed while maintaining security.

Crises like the COVID-19 pandemic, the need for new materials to address sustainability challenges, and the burgeoning effects of climate change demand more from science and technology than ever before. Organizations of all kinds are seeking solutions. With Project Photoresist, we showed how innovative technology can dramatically accelerate materials discovery by reducing the time it takes to find and synthesize a new molecule from years down to months. We believe even greater acceleration is possible with hybrid cloud.


By further accelerating discovery with hybrid cloud, we may be able to answer other urgent questions. Can we design molecules to pull carbon dioxide out of smog? Could any of the drugs we’ve developed for other purposes help fight COVID-19? Where might the next pandemic-causing germ originate? Beyond the immediate impacts, how will these crises—and the ways we might respond—affect supply chains? Human resource management? Energy costs? Innovation?

Rather than guessing at solutions, we seek them through rigorous experimentation: asking questions, testing hypotheses, and analyzing the findings in context with all that we already know.

Increasingly, enterprises are seeing value in applying the same discovery-driven approach to build knowledge, inform decisions, and create opportunities within their businesses.

Fueling these pursuits across domains is a rich lode of computing power and resources. Data and artificial intelligence (AI) are being used in new ways. The limitless nature of the cloud is transforming high-performance computing, and advances in quantum and other infrastructure are enabling algorithmic innovations to achieve new levels of impact. By uniting these resources, hybrid cloud could offer a platform to accelerate and scale discovery to tackle the toughest challenges.

Take the problem of semiconductor chips and the pressing demands on their size, performance, and environmental safety. It can take years and millions of dollars to find better photoresist materials for manufacturing chips. With hybrid cloud, we are designing a new workflow for photoresist discovery that integrates deep search tools, data from thousands of papers and patents, AI-enriched simulation, high-performance computing systems and, in the future, quantum computers to build AI models that automatically suggest potential new photoresist materials. Human experts then evaluate the candidates, and their top choices are synthesized in AI-driven automated labs. In late 2020, we ran the process for the first time and produced a new candidate photoresist material 2–3x faster than before.

To scale these gains more broadly, we need to make it easier to build and execute workflows like this one. This means overcoming barriers on several fronts.

Today, jobs must be configured manually, which entails significant overhead. The data we need to analyze are often stored in multiple places and formats, requiring more time and effort to reconcile. Each component in a workflow has unique requirements and must be mapped to the right infrastructure. And the virtualized infrastructure we use may add latency.

We envision a new hybrid cloud platform where users can easily scale their jobs; access and govern data in multiple cloud environments; capitalize on more sophisticated scheduling approaches; and enjoy agility, performance, and security at scale. The technologies outlined in the following sections illustrate the kinds of full-stack innovation we believe will usher these new workloads into the hybrid cloud era.

Reducing overhead with distributed, serverless computing

Scientists and developers alike should be able to define a job using their preferred language or framework, test their code locally, and run that job at whatever scale they require with minimal configuration. They need a simplified programming interface that enables distributed, serverless computing at scale.

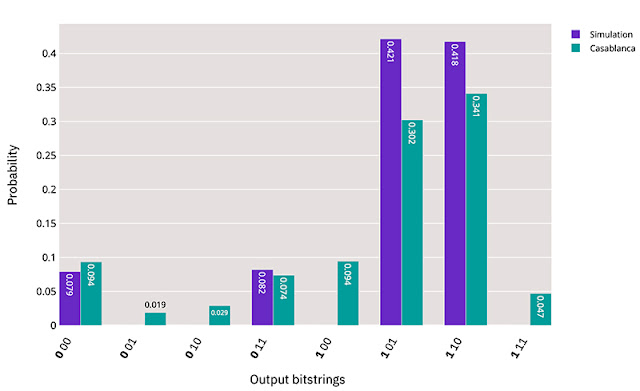

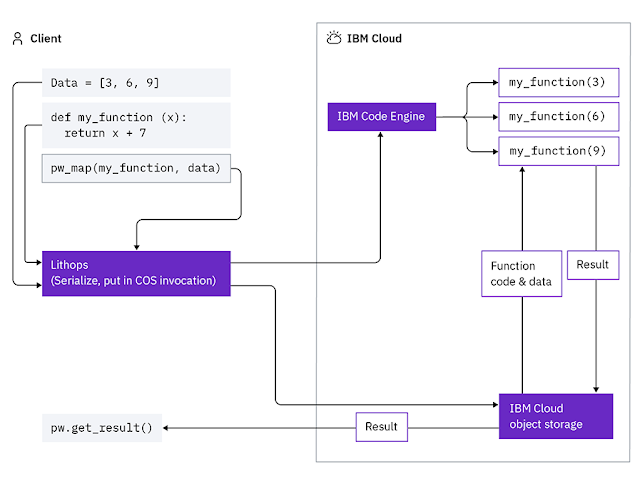

Many computationally intensive workloads involve large-scale data analytics, frequently using techniques like MapReduce. Data analytics engines like Spark and Hadoop have long been the tools of choice for these use cases, but they require significant user effort to configure and manage. As shown in Figure 1, we are extending serverless computing to handle data analytics at scale with an open-source project called Lithops (Lightweight Optimized Serverless Processing) running on a serverless platform like IBM Code Engine. This approach lets users focus on defining the job they want to run in an intuitive manner while the underlying serverless platform handles execution: deploying the job at massive scale, monitoring its progress, returning results, and much more.

Figure 1. A serverless user experience for discovery in the hybrid cloud (with MapReduce example)
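The MapReduce pattern mentioned above can be illustrated with a minimal sketch. It does not use Lithops or IBM Code Engine: a local thread pool stands in for the serverless platform, and the function names (`count_words`, `merge_counts`, `map_reduce`) are hypothetical, chosen only for illustration. The point is the user experience: the user writes a map function and a reduce function, and the execution layer decides how to fan the work out.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def count_words(chunk: str) -> Counter:
    """Map step: count the words in one chunk of text."""
    return Counter(chunk.lower().split())


def merge_counts(left: Counter, right: Counter) -> Counter:
    """Reduce step: combine two partial word counts."""
    return left + right


def map_reduce(map_fn, chunks, reduce_fn, max_workers=4):
    """Run map_fn over every chunk in parallel, then fold the results.

    The thread pool is a local stand-in (an assumption of this sketch) for
    a serverless platform, which would launch one function per chunk.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(map_fn, chunks))
    return reduce(reduce_fn, partials, Counter())


if __name__ == "__main__":
    chunks = ["hybrid cloud discovery", "cloud scale discovery", "cloud"]
    totals = map_reduce(count_words, chunks, merge_counts)
    print(totals.most_common(2))
```

In a real serverless deployment the user-facing code would look much the same, while resource provisioning, monitoring, and result collection would be handled by the platform rather than by a local pool.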

A team at the European Molecular Biology Laboratory recently used this combination to move a key discovery workflow to the serverless paradigm. The team was looking to discover the role of small molecules, or metabolites, in health and disease and developed an approach to glean insights from tumor images. A key challenge they faced with Spark was the need to define in advance the resources required at every step. The decentralized, serverless approach, built on Lithops and what is now IBM Code Engine, solved this problem by dynamically adjusting compute resources during data processing, which allowed the team to achieve greater scale and faster time to value. The solution lets them process datasets that were previously out of reach, with no additional overhead, scaling the data processing pipeline by an estimated 30x and speeding time to value by roughly 4–6x.

Meshing data without moving it

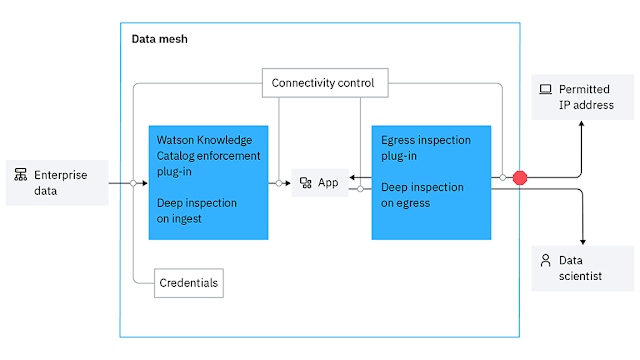

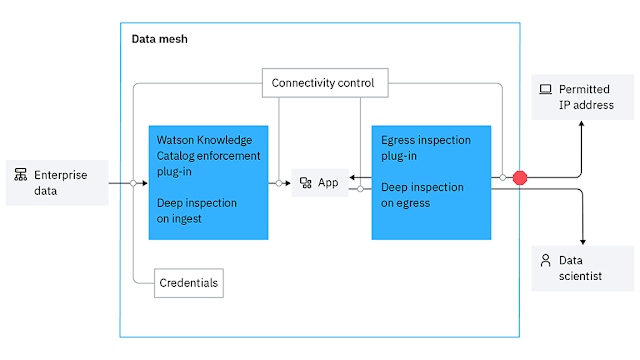

Organizations often leverage multiple data sources, scattered across various computing environments, to unearth the knowledge they need. Today, this usually entails copying all the relevant data to one location, which not only is resource-intensive but also introduces security and compliance concerns arising from the numerous copies of data being created and the lack of visibility and control over how it is used and stored. To speed discovery, we need a way to leverage fragmented data without copying it many times over.

To solve this problem, we took inspiration from the Istio open-source project, which devised mechanisms to connect, control, secure, and observe containers running in Kubernetes. This service mesh offers a simple way to achieve agility, security, and governance for containers at the same time. We applied similar concepts to unifying access and governance of data. The Mesh for Data open-source project allows applications to access information in various cloud environments—without formally copying it—and enables data security and governance policies to be enforced and updated in real time should the need arise (see Figure 2 for more detail). We open-sourced Mesh for Data in September 2020 and believe it is a promising approach to unify data access and governance across the siloed data estate in most organizations.

Figure 2. A unifying data fabric intermediates use and governance of enterprise data without copying it from its original location
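The idea of enforcing governance at access time, rather than on copies, can be sketched in a few lines. This is not the Mesh for Data API: `Policy`, `GovernedSource`, and the role and column names are hypothetical, and the sketch assumes a simple column-redaction policy. It shows records being read from their original location and filtered on the way out, so that a policy update takes effect on the next read.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, Iterator, Set

Record = Dict[str, str]


@dataclass
class Policy:
    """Hypothetical governance policy: columns each role must not see."""
    redacted_columns: Dict[str, Set[str]] = field(default_factory=dict)

    def apply(self, role: str, record: Record) -> Record:
        hidden = self.redacted_columns.get(role, set())
        return {k: ("***" if k in hidden else v) for k, v in record.items()}


@dataclass
class GovernedSource:
    """Wraps a data source in place and enforces the policy on every read."""
    fetch: Callable[[], Iterable[Record]]  # reads from the original location
    policy: Policy

    def read(self, role: str) -> Iterator[Record]:
        # Records are streamed and filtered at access time. The source data
        # is never modified, and the policy is consulted on each read, so
        # updates apply immediately.
        for record in self.fetch():
            yield self.policy.apply(role, record)


if __name__ == "__main__":
    patients = [{"id": "1", "name": "Ada", "diagnosis": "flu"}]
    source = GovernedSource(fetch=lambda: patients,
                            policy=Policy({"analyst": {"name"}}))
    print(list(source.read("analyst")))
```

A production data fabric would also handle authentication, auditing, and cross-cloud connectivity; the sketch only isolates the access-time enforcement step.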

Optimizing infrastructure and workflow management

Today’s

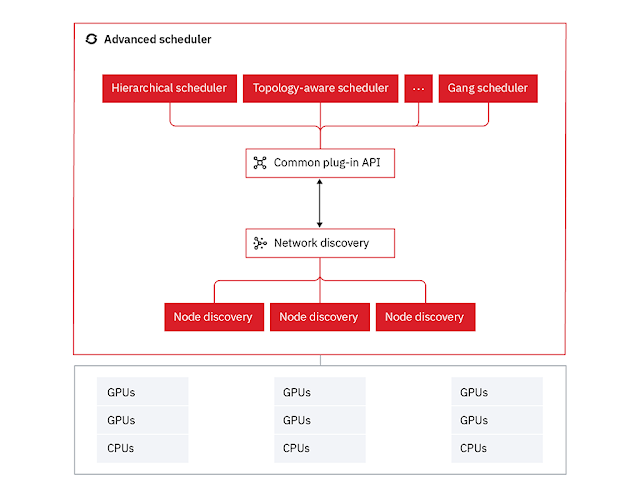

most advanced discovery workflows frequently include several complex components with unique requirements for compute intensity, data volume, persistence, security, hardware, and even physical location. For top performance, the components need to be mapped to infrastructure that accommodates their resource requirements and executed in close coordination. Some components may need to be scheduled “close by” in the data center (if significant communication is required) or scheduled in a group (e.g., in all-or-nothing mode). More sophisticated management of infrastructure and workflow can deliver cost-performance advantages to accelerate discovery.
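Group scheduling in all-or-nothing mode can be illustrated with a small sketch. It is a simplified greedy heuristic, not the Kubernetes scheduler or any production algorithm, and the node names and the single "cores" resource are assumptions made for illustration. The key property is that capacity is reserved tentatively and committed only if every task in the group can be placed.

```python
from typing import Dict, List, Optional


def schedule_gang(
    demands: List[int], free: Dict[str, int]
) -> Optional[Dict[int, str]]:
    """Place every task in the group, or none of them (all-or-nothing).

    demands: CPU cores each task in the group needs.
    free: free cores per node (hypothetical node names).
    Returns {task index: node} if this greedy pass places the whole group;
    otherwise returns None and leaves `free` untouched.
    """
    remaining = dict(free)  # tentative copy; committed only on success
    placement: Dict[int, str] = {}
    # Place the largest tasks first so small tasks do not crowd them out.
    for task in sorted(range(len(demands)), key=lambda i: -demands[i]):
        candidates = [n for n, cores in remaining.items()
                      if cores >= demands[task]]
        if not candidates:
            return None  # one task cannot run, so nothing is placed
        # Best fit: the node with the least free capacity that still fits.
        node = min(candidates, key=lambda n: (remaining[n], n))
        remaining[node] -= demands[task]
        placement[task] = node
    free.update(remaining)  # commit the reservation for the whole group
    return placement


if __name__ == "__main__":
    nodes = {"node-a": 4, "node-b": 2}
    print(schedule_gang([2, 2, 2], nodes))  # whole group fits
    print(schedule_gang([1], nodes))        # no capacity left, returns None
```

Because the pass is greedy, it can reject a group that a more exhaustive search would fit; a real scheduler would also weigh topology, memory, accelerators, and data locality.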

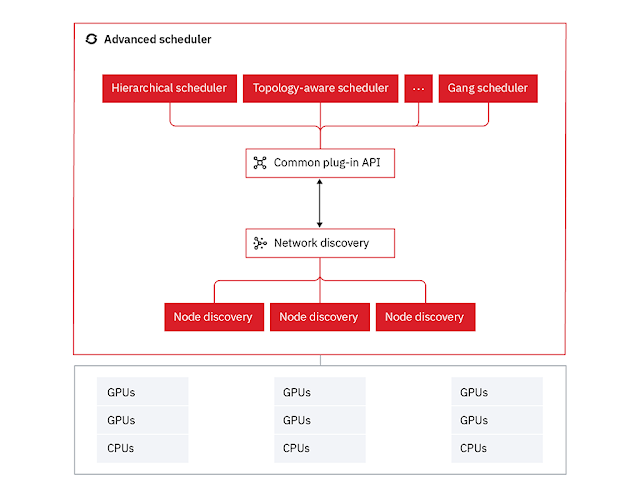

We have been innovating on scheduling algorithms and approaches for many years, including work on adaptive bin packing and topology-aware scheduling approaches. We also worked closely with the Lawrence Livermore National Laboratory, and more recently Red Hat, on the next-generation Flux scheduler, which has been used to run leading discovery workflows with more than 10⁸ calculations per second.
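For readers unfamiliar with bin packing as a scheduling primitive, the sketch below shows the classic first-fit-decreasing heuristic. It is a textbook baseline, not the adaptive bin packing work referred to above, and it assumes a single resource dimension with identical node capacities.

```python
from typing import List


def first_fit_decreasing(sizes: List[float], capacity: float) -> List[List[float]]:
    """Pack items into fixed-capacity bins using first-fit decreasing.

    Items are sorted from largest to smallest, and each one goes into the
    first bin with enough room; a new bin is opened only when none fits.
    """
    bins: List[List[float]] = []
    loads: List[float] = []
    for size in sorted(sizes, reverse=True):
        if size > capacity:
            raise ValueError(f"item of size {size} exceeds capacity {capacity}")
        for i, load in enumerate(loads):
            if load + size <= capacity:
                bins[i].append(size)
                loads[i] += size
                break
        else:
            # No existing bin had room, so open a new one.
            bins.append([size])
            loads.append(size)
    return bins


if __name__ == "__main__":
    # Task sizes in cores, packed onto nodes with 10 cores each.
    print(first_fit_decreasing([4, 8, 1, 4, 2, 1], capacity=10))
```

An adaptive variant would adjust its packing decisions as observed resource usage changes, which this static sketch does not attempt.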

Bringing capabilities like these to the Kubernetes world will truly enable a new era of discovery in the hybrid cloud. Although the Kubernetes scheduler was not designed or optimized for these use cases, it is flexible enough to be extended with new approaches. Scheduling innovations that address the complex infrastructure and coordination needs of discovery workflows are essential to delivering advances in performance and efficiency and, eventually, optimizing infrastructure utilization across cloud environments (see Figure 3).

Figure 3. Evolve Kubernetes scheduling for discovery workflows.

Lightweight but secure virtualization

Each task in a discovery workflow is ultimately run on virtualized infrastructure, such as virtual machines (VMs) or containers. Users want to spin up a virtualized environment, run the task, and then tear it down as fast as possible. But speed and performance are not the only criteria, especially for enterprise users: security matters, too. Enterprises often run their tasks inside of VMs for this reason. Whereas containers are far more lightweight, they are also considered less secure because they share certain functionality in the Linux kernel. VMs, on the other hand, do not share kernel functionality, so they are more secure but must carry with them everything that might be needed to run a task. This “baggage” makes them less agile than containers. We are on the hunt for more lightweight virtualization technology with the agility of containers and the security of VMs.

A first step in this direction is a technology called Kata Containers, which we are exploring and optimizing in partnership with Red Hat. Kata containers are containers that run inside a VM that is trimmed down to remove code that is unlikely to be needed. We’ve demonstrated that this technology can reduce startup time by 4–17x, depending on the configuration. Most of the cuts come from QEMU, the machine emulator and virtualizer responsible for emulating all the hardware the VM might need to complete a task. The key is to strike a balance between agility and generality, trimming as much as possible without compromising QEMU’s ability to run a task.

Bringing quantum to the hybrid cloud

Quantum computing can transform AI, chemistry, computational finance, optimization, and other domains. In our recently released IBM Quantum Development Roadmap, we articulate a clear path to a future that can use cloud-native quantum services in applications such as discovery.

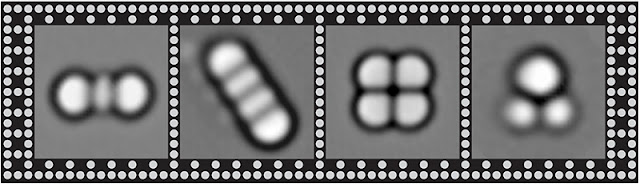

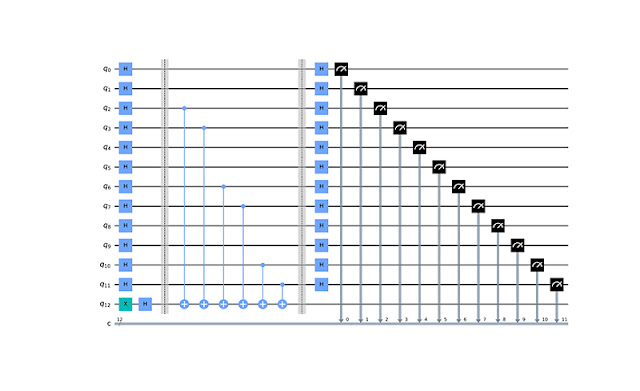

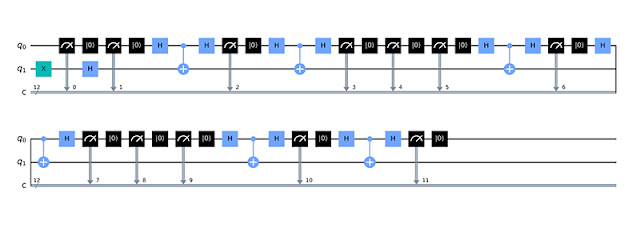

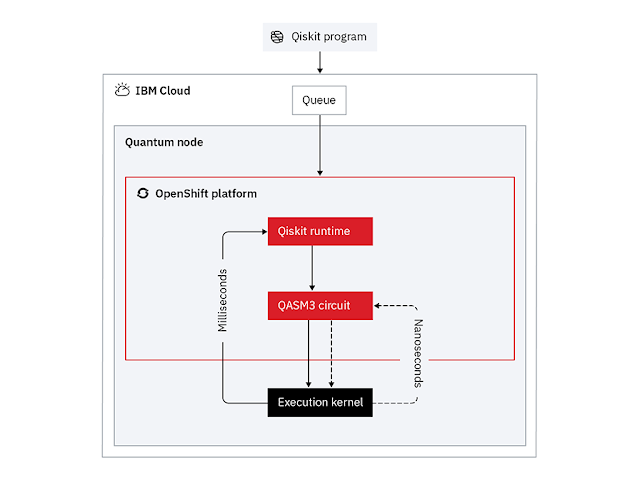

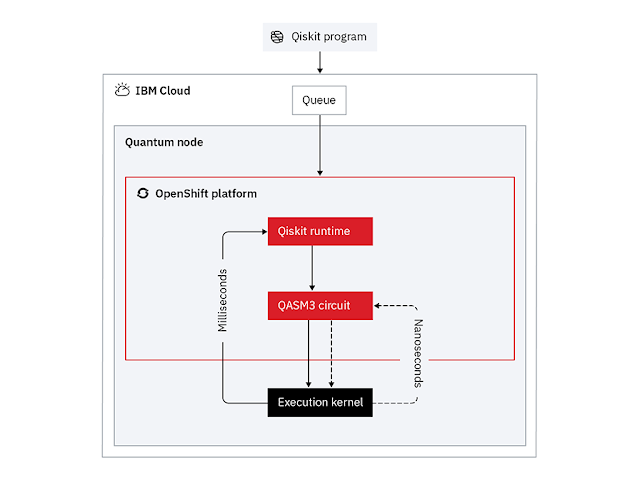

The basic unit of work for a quantum computer is a “circuit.” These are combined with classical cloud-based resources to implement new algorithms, reusable software libraries, and pre-built application services. IBM first put a quantum computer on the cloud in 2016, and since then, developers and clients have run over 700 billion circuits on our hardware.
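To make the notion of a circuit concrete, here is a toy two-qubit example written in plain Python. It does not use Qiskit or any IBM Quantum service; the function names are hypothetical, and the state is simulated classically as a list of four amplitudes. The circuit applies a Hadamard gate and then a CNOT gate, which produces an entangled Bell state.

```python
import math
from typing import List

# Amplitudes for |00>, |01>, |10>, |11>; the first qubit is the left bit.
State = List[complex]


def hadamard_on_first(state: State) -> State:
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state: State) -> State:
    """Flip the second qubit where the first is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state: State) -> List[float]:
    """Measurement probabilities are the squared amplitude magnitudes."""
    return [abs(a) ** 2 for a in state]


def bell_circuit() -> State:
    """A two-gate circuit: start in |00>, apply H, then CNOT."""
    state: State = [1, 0, 0, 0]
    return cnot_first_controls_second(hadamard_on_first(state))


if __name__ == "__main__":
    for label, p in zip(["00", "01", "10", "11"], probabilities(bell_circuit())):
        print(label, round(p, 3))
```

Measuring this state yields 00 or 11 with equal probability and never 01 or 10. On real hardware the circuit would be submitted to a quantum system and sampled many times, with classical resources handling everything around it.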

In the Development Roadmap, we focus on three system attributes:

◉ Quality – How well are circuits implemented in quantum systems?

◉ Capacity – How fast can circuits run on quantum systems?

◉ Variety – What kind of circuits can developers implement for quantum systems?

We will deliver these through scaling our hardware and increasing Quantum Volume, as well as

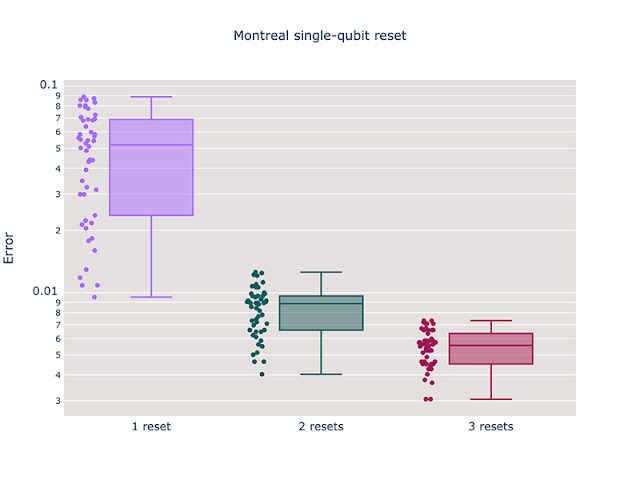

◉ The Qiskit Runtime to run quantum applications 100x faster on the IBM Cloud,

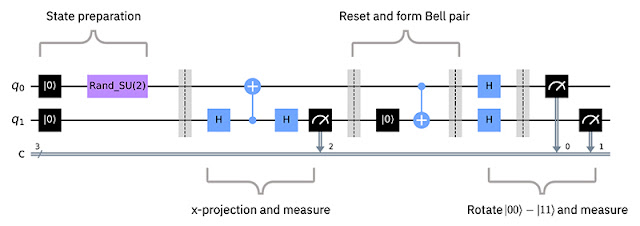

◉ Dynamic circuits to bring real-time classical computing to quantum circuits, improving accuracy and reducing required resources, and

◉ Qiskit application modules to lay the foundation for quantum model services and frictionless quantum workflows.

This roadmap and our work are shaped by close collaboration with our clients. Their needs determine the application modules that we and the community are building. Those modules will require dynamic circuits and the Qiskit Runtime to solve clients’ problems as quickly, accurately, and efficiently as possible. Together, these capabilities will provide significant computational power and flexibility for discovery.

We envision a future of quantum computing that doesn’t require learning a new programming language and running code separately on a new device. Instead, we see a future with quantum easily integrated into a typical computing workflow, just like a graphics processor or any other external computing component. Any developer will be able to work seamlessly within the same integrated IBM Cloud-based framework. (See Figure 4.)

We call this vision frictionless quantum computing, and it’s a key element of our Development Roadmap.

Figure 4. Integration of quantum systems into the hybrid cloud.

Source: ibm.com