There are five DevOps trends that I believe will leave their mark on 2020. I’ll walk you through all five, plus some recommended next steps to take full advantage of them.

In 2019, Accenture’s disruptability index found that at least two-thirds of large organizations face high levels of industry disruption. Driven by pervasive technology change, organizations pursued new, more agile business models and new opportunities. Organizations that delivered applications and services faster were able to react more swiftly to those market changes and were better equipped to disrupt rather than be disrupted.

A study by the DevOps Research and Assessment group (DORA) shows that the best-performing teams deliver applications 46 times more frequently than the lowest-performing teams. In practice, the best teams can deliver value to customers as often as every hour, rather than monthly or quarterly.

2020 will be the year of delivering software at speed and with high quality, but the big change will be a focus on strong DevOps governance. The desire to take a DevOps approach is now the norm. We are entering a new chapter that calls for DevOps at scale, better ways to manage multiple tools and platforms, and tighter alignment between IT and the business. DevOps culture and tools are critical, but without strong governance, you can’t scale. To succeed, business needs must be the driver. The end state, after all, is one where increased IT agility enables maximum business agility. To build trust across formerly disconnected teams, all DevOps stakeholders, including the business, will need shared visibility into and insight from the end-to-end pipeline.

DevOps trends in 2020

What will be the enablers and catalysts in 2020 driving DevOps agility?

Prediction 1: DevOps champions will enable business innovation at scale. From leaders to practitioners, DevOps champions will work side by side and share goals, concerns and requirements. This collaboration will include the following:

◉ A desire to speed the flow of software

◉ Concerns about the quality of releases, release management, and how quality activities impact the delivery lifecycle and customer expectations

◉ Continual optimization of the delivery process, including visualization and governance requirements

Prediction 2: More fragmentation of DevOps toolchains will motivate organizations to turn to value streams. 2020 will be the year of “DevOps for X”: DevOps for Kubernetes, DevOps for clouds, DevOps for mobile, DevOps for databases, DevOps for SAP and more. In the coming year, expect to see DevOps for anything involved in the production and delivery of software updates, application modernization, service delivery and integration. Developers, platform owners and site reliability engineers (SREs) will be given more control over, and visibility into, the architectural and infrastructural components of the lifecycle. As the number of tools grows, a value stream view that follows work across all of them gives organizations a single place to establish governance, and the growing set of stakeholders will benefit from access to, and visibility into, the delivery pipeline.

Figure 1: UrbanCode Velocity and its Value Stream Management screen enable full DevOps governance.

Prediction 3: Tekton will have a significant impact on cloud-native continuous delivery. Tekton is a set of shared open-source components for building continuous integration and continuous delivery systems. What if you were able to build, test and deploy apps to Kubernetes using an open source, vendor-neutral, Kubernetes-native framework? That’s the Tekton promise, under a framework of composable, declarative, reproducible and cloud-native principles. Tekton has a bright future now that it is strongly embraced by a large community of users along with organizations like Google, CloudBees, Red Hat and IBM.
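To make the “composable, declarative” idea more concrete, here is a minimal sketch of two Tekton Tasks and a Pipeline that chains them. The resources are written as Python dicts that mirror the Kubernetes manifests and are printed as JSON, which kubectl accepts as well as YAML. It assumes the `tekton.dev/v1beta1` API; the task names, container image and shell scripts are hypothetical placeholders, and source checkout and workspaces are deliberately left out. In day-to-day use these resources are usually written directly in YAML.

```python
import json

# Hypothetical sketch: plain dicts mirroring Tekton's tekton.dev/v1beta1 schema.
API_VERSION = "tekton.dev/v1beta1"


def make_tekton_task(name: str, image: str, script: str) -> dict:
    """Return a minimal Task: one declared parameter and a single containerized step."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Task",
        "metadata": {"name": name},
        "spec": {
            # Declared input; a PipelineRun or TaskRun may override the default.
            "params": [{"name": "context", "type": "string", "default": "."}],
            "steps": [
                {
                    "name": "run",
                    "image": image,
                    # Tekton substitutes $(params.context) before the step runs.
                    "script": script,
                }
            ],
        },
    }


def make_pipeline(name: str, task_names: list) -> dict:
    """Compose existing Tasks in order; each Task runs after the previous one."""
    tasks = []
    previous = None
    for task_name in task_names:
        entry = {"name": task_name, "taskRef": {"name": task_name}}
        if previous is not None:
            entry["runAfter"] = [previous]
        tasks.append(entry)
        previous = task_name
    return {
        "apiVersion": API_VERSION,
        "kind": "Pipeline",
        "metadata": {"name": name},
        "spec": {"tasks": tasks},
    }


if __name__ == "__main__":
    # Placeholder names, image and commands (hypothetical).
    vet = make_tekton_task(
        "vet", "golang:1.14", "#!/bin/sh\ncd $(params.context)\ngo vet ./...\n"
    )
    unit_tests = make_tekton_task(
        "unit-tests", "golang:1.14", "#!/bin/sh\ncd $(params.context)\ngo test ./...\n"
    )
    pipeline = make_pipeline("ci", ["vet", "unit-tests"])
    # A v1 List lets a single file carry all three resources.
    manifest = {"apiVersion": "v1", "kind": "List", "items": [vet, unit_tests, pipeline]}
    print(json.dumps(manifest, indent=2))
```

The design point is that each Task is a self-contained, reusable unit and the Pipeline only declares ordering (`runAfter`), so the same Tasks can be recombined into other pipelines. On a cluster with Tekton installed, the printed manifest could be saved and applied with `kubectl apply -f`, and a PipelineRun would then execute it.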

Prediction 4: DevOps accelerators will make DevOps kings. In the search for holistic optimization, organizations will move beyond providing integrations to creating sets of “best practices in a box.” These will let systems communicate fluidly while remaining auditable for compliance. These assets will become easier to discover, adopt and customize. Test assets that have traditionally been developed and maintained by software and system integrators will instead be provided by ambitious user communities, vendors, service providers, regulatory services and domain specialists.

Prediction 5: Artificial intelligence (AI) and machine learning in DevOps will go from marketing to reality. Tech giants such as Google and IBM will continue researching how to bring the benefits of DevOps to quantum computing, blockchain, AI, bots, 5G and edge technologies. They will also continue to explore how these technologies can be applied to continuous deployment, continuous testing and test prediction, performance testing, and other parts of the DevOps pipeline. DevOps solutions will be able to detect, highlight or act independently when opportunities for improvement or risk mitigation surface, from the moment an idea becomes a story until that story becomes a solution in the hands of its users.

Next steps

Companies embracing DevOps will need to carefully evaluate their current internal landscape, then prioritize next steps for DevOps success.

First, identify a DevOps champion to lead the efforts, beginning with automation. Establishing an automated and controlled path to production is the starting point for many DevOps transformations and one where leaders can show ROI clearly.

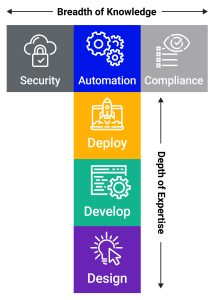

Then, the focus should turn toward scaling best practices across the enterprise and introducing governance and optimization. This includes reducing waste, optimizing flow and shifting security, quality and governance to the left, that is, earlier in the delivery lifecycle. It also means increasing the frequency of complex releases by simplifying, digitizing and streamlining execution.

Figure 2: Scaling best practices across the enterprise.

These are big steps, so accelerate your DevOps journey by aligning with a vendor that has a long-term vision and a reputation for helping organizations navigate transformational journeys successfully. OVUM and Forrester have identified organizations that can help support your modernization in the following OVUM report, OVUM webinar and Forrester report.