The approach of security as a choice

In your journey to the cloud, there are many approaches to securing your workloads. One approach is very common across a variety of cloud vendors: you choose how yours is secured.

In a world where a breach of personal information can cost your enterprise millions of dollars in regulatory fines, on top of the cost of lost consumer confidence, inadequate security is a threat that cannot be taken lightly. The average cost of a data breach is $3.92 million, according to the 2019 Cost of a Data Breach Report from IBM Security and the Ponemon Institute, and that figure does not include the damage to your reputation.

Data breaches, especially massively impactful ones, are becoming more and more common. One of the most effective ways to reduce the cost of a data breach is encryption, which can render stolen data useless.

Don’t get me wrong: choice and flexibility are wonderful things to have when selecting a cloud provider. An approach that does not enforce the encryption of all data may work for non-sensitive, less critical workloads such as DevTest. For workloads with sensitive data, however, there must be a better way to protect your business.

The approach of security at the core

Companies want to stay out of the news. To avoid publicized breaches, they need the strongest security available to protect business and customer information. Designed with secure engineering best practices in mind, the IBM Cloud™ Hyper Protect platform has layered security controls across the network and infrastructure, designed to help you protect your data from both internal and external threats.

Rather than simply letting you choose how content is secured, IBM Cloud™ Hyper Protect is engineered to enforce encryption by default for all data in transit and at rest. You do not have to decide whether to encrypt your data and then pay for and add separate services to do so.

You do not have to turn security on; it is always there, providing protection 24x7x365.

IBM Cloud™ Hyper Protect Services, in conjunction with strong operational security practices, are designed to help you secure your most valuable assets. IBM Cloud™ Hyper Protect Services are built on a foundation of security and trust based on over 50 years of experience in enterprise computing. With Hyper Protect, clients choose who is entitled to view their content, and everyone else is blocked from access. Clients retain full sovereignty over their data.

How does it work?

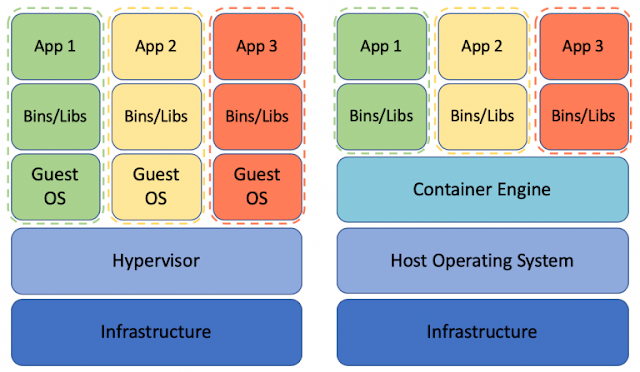

IBM Cloud™ Hyper Protect Services are built on top of the IBM Secure Service Container. The IBM Secure Service Container (SSC) is a specialized technology for installing and executing specific firmware or software appliances. These appliances host cloud workloads on IBM LinuxONE in the IBM Cloud™. Secure Service Container is designed to deliver:

◉ Tamper protection during installation

◉ Restricted administrator access to help prevent the misuse of privileged user credentials

◉ Automatic encryption of data both in flight and at rest

In addition, Hyper Protect offerings are engineered to radically reduce the likelihood of an internal breach by ensuring that:

◉ There is no system administrator access, which removes the potential for many kinds of intentional or unintentional data leakage

◉ Memory access from outside the Secure Service Container is disabled

◉ Storage volumes are encrypted

◉ Debug data (dumps) are encrypted

Building on these protections, the external attack surface is also radically reduced by:

◉ Preventing OS-level access to the services, whether by IBM or by external parties. There is no shell access to the services

◉ Only secured remote APIs are exposed, which helps prevent attackers from “fishing” around for vulnerabilities in the underlying infrastructure

What’s the key question?

“Do I need to secure my data?”

If the answer is ‘yes,’ then a suggested approach is to choose a platform that starts with security at its core by enforcing encryption by default, and that closes off the common attack vectors open to cloud and application administrators as well as to external malicious actors.

Combined with robust secure engineering and operational strategies that incorporate static and dynamic code analysis, monitoring, threat intelligence and other security-related best practices, the IBM Cloud™ Hyper Protect portfolio of services can help advance your cloud and cloud security strategies to the next level.

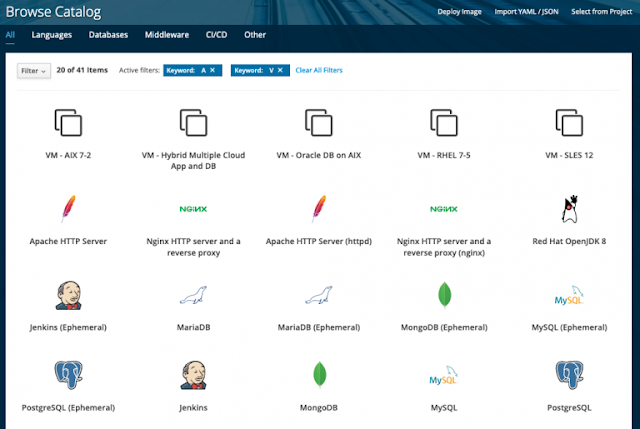

◉ Off the shelf, IBM Cloud™ Hyper Protect DBaaS provides data confidentiality for sensitive data stored in cloud-native databases such as PostgreSQL and MongoDB

◉ IBM Cloud™ Hyper Protect Crypto Services, built on an industry-leading, FIPS 140-2 Level 4-certified Hardware Security Module (HSM), is designed to give you exclusive control of your encryption keys and the entire key hierarchy, including the HSM master key

◉ IBM Cloud™ Hyper Protect Virtual Servers, in beta as of October 2019, is designed to provide industry-leading workload protection

Source: ibm.com