IBM Cloud Virtual Private Cloud (VPC) is designed for secured cloud computing, and several aspects of how we plan, develop and operate the platform support that design. However, because security in the cloud is typically a shared responsibility between the cloud service provider and the customer, it’s essential that you understand the layers of security your workloads run on. This article details a few key security components of IBM Cloud VPC that aim to provide secured computing for our virtual server customers.

Let’s start with the hypervisor

The hypervisor, a critical component of any virtual server infrastructure, is designed to provide a secure environment on which customer workloads and a cloud’s native services can run. The entirety of its stack, from hardware and firmware to system software and configuration, must be protected from external tampering. Firmware and hypervisor software are the lowest layers of modifiable code and are prime targets of supply chain attacks and other privileged threats. Kernel-mode rootkits and bootkits are privileged threats of this kind, and endpoint protection systems such as antivirus and endpoint detection and response (EDR) software have difficulty uncovering them, because they can load before any protection system starts and then obscure their own presence. In short, securing the supply chain itself is crucial.

IBM Cloud VPC implements a range of controls to help address the quality, integrity and supply chain of the hardware, firmware and software we deploy, including qualification and testing before deployment.

IBM Cloud VPC’s 3rd generation solutions leverage pervasive code signing to protect the integrity of the platform. Through this process, firmware is digitally signed at the point of origin and signatures are authenticated before installation. At system start-up, a platform security module then verifies the integrity of the system firmware image before initialization of the system processor. The firmware, in turn, authenticates the hypervisor, including device software, thus establishing the system’s root of trust in the platform security module hardware.

Device configuration and verification

IBM Cloud Virtual Servers for VPC provide a wide variety of profile options (vCPU + RAM + bandwidth provisioning bundles) to help meet customers’ different workload requirements. Each profile type is managed through a set of product specifications. These product specifications outline the physical hardware’s composition, the firmware’s composition and the configuration for the server. The software includes, but is not limited to, the host firmware and component devices. These product specifications are developed and overseen by a hardware leadership team and are versioned for use across our fleet of servers.

As new hardware and software assets are brought into our IBM Cloud VPC environment, they’re also mapped to a product specification, which outlines their intended configuration. The intake verification process then validates that the server’s actual physical composition matches that of the specification before its entry into the fleet. If there’s a physical composition that doesn’t match the specification, the server is cordoned off for inspection and remediation.
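The composition check described above can be sketched as a comparison between a specification and an observed inventory. This is only an illustration under stated assumptions: the `ProductSpec` class, the component names and the `"admit"`/`"cordon"` outcomes are hypothetical and do not reflect IBM Cloud’s actual tooling or data model.

```python
from dataclasses import dataclass, field


@dataclass
class ProductSpec:
    """Hypothetical product specification: expected component counts for one server type."""
    name: str
    version: str
    components: dict = field(default_factory=dict)  # component type -> expected count


def intake_mismatches(spec, actual):
    """List every difference between the specification and the observed inventory."""
    problems = []
    # Look at parts named by either side so unexpected extras are caught too.
    for part in sorted(set(spec.components) | set(actual)):
        expected = spec.components.get(part, 0)
        found = actual.get(part, 0)
        if expected != found:
            problems.append(f"{part}: expected {expected}, found {found}")
    return problems


def intake_decision(spec, actual):
    """Admit the server only on an exact match; otherwise cordon it for inspection."""
    return "admit" if not intake_mismatches(spec, actual) else "cordon"


# Hypothetical specification and two observed inventories.
spec = ProductSpec("example-profile", "1.4.0", {"cpu": 2, "dimm": 16, "nic": 2})
matching = {"cpu": 2, "dimm": 16, "nic": 2}
deviating = {"cpu": 2, "dimm": 12, "nic": 2, "usb_storage": 1}

print(intake_decision(spec, matching))
print(intake_decision(spec, deviating), intake_mismatches(spec, deviating))
```

Comparing the union of both key sets means that an unexpected extra device is reported just like a missing one, which matters when the concern is supply chain tampering rather than only incomplete assembly.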

The intake verification process also verifies the firmware and hardware.

There are two dimensions of this verification:

1. Firmware is signed by an approved supplier before it can be installed on an IBM Cloud Virtual Server for VPC system. This helps ensure only approved firmware is applied to the servers. IBM Cloud works with several suppliers to help ensure firmware is signed and components are configured to reject unauthorized firmware.

2. Only firmware that is approved through the IBM Cloud governed specification qualifies for installation. The governed specification is updated cyclically to add newly qualified firmware versions and remove obsolete versions. This type of firmware verification is also performed as part of the server intake process and before any firmware update.
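The two dimensions above can be read as two independent gates that a firmware image must pass. The sketch below is a hedged illustration: the supplier names, the component names, the version sets and the `FirmwareCandidate` type are all hypothetical, and the `signature_valid` flag stands in for a real cryptographic signature check that would be performed elsewhere.

```python
from dataclasses import dataclass

# Hypothetical data: neither the suppliers nor the versions come from IBM Cloud.
APPROVED_SUPPLIERS = {"supplier-a", "supplier-b"}
GOVERNED_FIRMWARE = {"bmc": {"2.1.0", "2.2.0"}, "nic": {"7.4"}}


@dataclass
class FirmwareCandidate:
    component: str
    version: str
    signer: str
    signature_valid: bool  # stand-in for the result of a real signature verification


def may_install(fw):
    """Return (allowed, reasons); both gates must pass before installation."""
    reasons = []
    # Gate 1: the image is signed by an approved supplier.
    if not fw.signature_valid or fw.signer not in APPROVED_SUPPLIERS:
        reasons.append("not signed by an approved supplier")
    # Gate 2: the version is listed in the governed specification.
    if fw.version not in GOVERNED_FIRMWARE.get(fw.component, set()):
        reasons.append("version not in governed specification")
    return (not reasons, reasons)


print(may_install(FirmwareCandidate("bmc", "2.2.0", "supplier-a", True)))
print(may_install(FirmwareCandidate("bmc", "1.9.0", "supplier-a", True)))
print(may_install(FirmwareCandidate("nic", "7.4", "unknown-vendor", True)))
```

Keeping the gates separate shows why both are needed: a correctly signed image can still be an obsolete version that the governed specification has retired.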

Server configuration is also managed through the governed product specifications. Certain solutions might need custom unified extensible firmware interface (UEFI) configurations, certain features enabled or restrictions put in place. The product specification manages the configurations, which are applied through automation on the servers. Servers are scanned by IBM Cloud’s monitoring and compliance framework at run time.

Specification versioning and promotion

As mentioned earlier, the core components of the IBM Cloud VPC virtual server management process are the product specifications. Product specifications are definition files that contain the configurations for all maintained server profiles. They are reviewed by the corresponding IBM Cloud product leader and by a governance-focused leadership team. Together, these reviewers control and manage the server’s approved components, configuration and firmware levels to be applied. The governance-focused leadership team strives for commonality where needed, whereas the product leaders focus on providing value and market differentiation.

It’s important to remember that specifications don’t stand still. These definition files are living documents that evolve as new firmware levels are released or the server hardware grows to support extra vendor devices. Because of this, the IBM Cloud VPC specification process is versioned to capture changes throughout the server’s lifecycle. Each server deployment captures the version of the specification that it was deployed with and provides identification of the intended versus actual state as well.

Promotion of specifications is also necessary. When a specification is updated, it doesn’t necessarily mean it’s immediately effective across the production environments. Instead, it moves through the appropriate development, integration and preproduction (staging) channels before moving to production. Depending on the types of devices or fixes being addressed, there might even be a varying schedule for how quickly the rollout occurs.

Figure 1: IBM Cloud VPC specification promotion process
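As a rough illustration of the promotion path, a specification version can be modeled as advancing one channel at a time. The channel names come from the text above; the `promote` function and its `checks_passed` gate are assumptions made for this sketch and do not describe IBM Cloud’s actual pipeline.

```python
# Channel order taken from the article; everything else here is hypothetical.
CHANNELS = ["development", "integration", "preproduction", "production"]


def promote(current_channel, checks_passed):
    """Return the channel a specification version occupies after a promotion attempt."""
    if current_channel not in CHANNELS:
        raise ValueError(f"unknown channel: {current_channel}")
    # A version stays where it is if its checks fail or it is already in production.
    if not checks_passed or current_channel == "production":
        return current_channel
    return CHANNELS[CHANNELS.index(current_channel) + 1]


channel = "development"
for outcome in (True, True, False, True):
    channel = promote(channel, outcome)
    print(channel)
```

Because a version can only move to the adjacent channel, an updated specification cannot reach production without first passing through every earlier environment.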

Firmware on IBM Cloud VPC is typically updated in waves. Where possible, it might be updated live, although some updates require downtime. Generally, this is unseen by our customers due to live migration. However, because live migration takes time to move customer workloads, firmware updates can take a while to roll through production. Once a specification update has been promoted through the pipeline, the update begins rolling out across the various runtime systems. The velocity of the update is generally dictated by the severity of the change.
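One way to picture a wave-based rollout whose speed depends on severity is to let the severity pick the share of hosts updated per wave. The severity labels and fractions below are invented for illustration only; the article does not state how wave sizes are actually chosen.

```python
# Hypothetical mapping from change severity to the share of hosts per wave.
WAVE_FRACTION = {"critical": 0.5, "high": 0.25, "routine": 0.1}


def plan_waves(hosts, severity):
    """Split hosts into consecutive waves; more severe changes use larger waves."""
    size = max(1, int(len(hosts) * WAVE_FRACTION[severity]))
    return [hosts[i:i + size] for i in range(0, len(hosts), size)]


hosts = [f"host-{n:02d}" for n in range(10)]
for severity in ("critical", "high", "routine"):
    waves = plan_waves(hosts, severity)
    print(severity, len(waves), "waves, first wave:", waves[0])
```

Larger waves finish the rollout sooner but require more workloads to be moved at the same time, which is the trade-off a severity-driven schedule has to balance.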

How IBM Cloud VPC virtual servers set up a hardware root of trust

IBM Cloud Virtual Servers for VPC include root of trust hardware known as the platform security module. Among other functions, the platform security module hardware is designed to verify the authenticity and integrity of the platform firmware image before the main processor can boot. It verifies the image authenticity and signature using an approved certificate. The platform security module also stores copies of the platform firmware image. If the platform security module finds that the firmware image installed on the host was not signed with the approved certificate, the platform security module replaces it with one of its images before initializing the main processor.
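The verify-then-recover behavior described above can be sketched in a few lines. This is a simplified model, not the module’s real logic: a SHA-256 digest allowlist stands in for certificate-based signature verification, the function and variable names are hypothetical, and re-checking each stored copy before using it is an assumption of this sketch.

```python
import hashlib


def digest(image):
    """SHA-256 digest of a firmware image, used here as a stand-in for a signature."""
    return hashlib.sha256(image).hexdigest()


def select_boot_image(installed, stored_copies, is_authentic):
    """Return the firmware image the main processor is allowed to initialize with."""
    if is_authentic(installed):
        return installed
    # Recovery path: fall back to a copy held by the security module.
    for copy in stored_copies:
        if is_authentic(copy):
            return copy
    raise RuntimeError("no authentic firmware image available; refusing to boot")


golden = b"platform firmware 1.0"
approved_digests = {digest(golden)}


def is_authentic(image):
    return digest(image) in approved_digests


tampered = b"platform firmware 1.0 + implant"
print(select_boot_image(golden, [golden], is_authentic) == golden)
print(select_boot_image(tampered, [golden], is_authentic) == golden)
```

In both calls the main processor would start from the approved image, either because the installed image passed verification or because the tampered one was replaced by a stored copy.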

Upon initialization of the main processor and loading of the system firmware, the firmware is then responsible for authenticating the hypervisor’s bootloader as part of a process known as secure boot, which aims to establish the next link in a chain of trust. Before loading the bootloader, the firmware verifies that it was signed using an authorized key. Keys are authorized when their corresponding public counterparts are enrolled in the server’s key database. Once the bootloader is verified and loaded, it validates the kernel before the latter can run. Finally, the kernel validates all modules before they’re loaded into the kernel. Any component that fails the validation is rejected, causing the system boot to halt.
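The chain of trust can be pictured as a loop in which each link must validate before the next one is loaded. In this sketch the `Component` type, the key identifiers and the `BootHalted` exception are hypothetical, and a simple membership test on a key identifier stands in for real signature verification against the enrolled public keys.

```python
from dataclasses import dataclass


class BootHalted(Exception):
    """Raised when a component fails validation and the boot must stop."""


@dataclass
class Component:
    name: str
    signing_key: str  # identifier of the key that signed this component (stand-in)


def secure_boot(chain, enrolled_keys):
    """Load components in order; halt at the first one signed by a non-enrolled key."""
    loaded = []
    for component in chain:
        if component.signing_key not in enrolled_keys:
            raise BootHalted(f"{component.name} failed validation")
        loaded.append(component.name)
    return loaded


enrolled = {"key-platform", "key-os-supplier"}  # hypothetical key database contents
good_chain = [
    Component("bootloader", "key-os-supplier"),
    Component("kernel", "key-os-supplier"),
    Component("kernel-module", "key-platform"),
]
bad_chain = good_chain[:2] + [Component("kernel-module", "key-unknown")]

print(secure_boot(good_chain, enrolled))
try:
    secure_boot(bad_chain, enrolled)
except BootHalted as err:
    print("halted:", err)
```

The early exit mirrors the behavior described above: a single rejected component stops the boot rather than being skipped.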

The integration of secure boot with the platform security module aims to create a line of defense against the injection of unauthorized software through supply chain attacks or privileged operations on the server. Only approved firmware, bootloaders, kernels and kernel modules signed with IBM Cloud certificates and those of previously approved operating system suppliers can boot on IBM Cloud Virtual Servers for VPC.

The firmware configuration process described above includes the verification of firmware secure boot keys against the list of those initially approved. These consist of the keys in the authorized keys database (db), the forbidden keys database (dbx), the key exchange key (KEK) and the platform key (PK).
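Checking the enrolled secure boot keys against an approved baseline amounts to a set comparison per key store. The sketch below uses the standard UEFI variable names (PK, KEK, db, dbx), but the fingerprints are placeholders and the `key_deviations` helper is hypothetical, not part of any IBM Cloud or UEFI tooling.

```python
KEY_STORES = ("PK", "KEK", "db", "dbx")


def key_deviations(approved, observed):
    """Report, per key store, fingerprints that are unexpected or missing."""
    report = {}
    for store in KEY_STORES:
        want = approved.get(store, set())
        have = observed.get(store, set())
        unexpected = sorted(have - want)
        missing = sorted(want - have)
        if unexpected or missing:
            report[store] = {"unexpected": unexpected, "missing": missing}
    return report


# Placeholder fingerprints for illustration only.
approved = {"PK": {"fp-pk"}, "KEK": {"fp-kek"}, "db": {"fp-a", "fp-b"}, "dbx": {"fp-revoked"}}
observed = {"PK": {"fp-pk"}, "KEK": {"fp-kek"}, "db": {"fp-a", "fp-b", "fp-rogue"}, "dbx": set()}

print(key_deviations(approved, approved))
print(key_deviations(approved, observed))
```

Reporting missing entries as well as unexpected ones matters for the forbidden keys: an emptied revocation list weakens secure boot as much as a rogue authorized key does.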

Secure boot also includes a provision to enroll additional kernel and kernel module signing keys, known as machine owner keys (MOKs), into the first-stage bootloader (shim). Therefore, IBM Cloud’s operating system configuration process is also designed so that only approved keys are enrolled in the MOK facility.

Once a server passes all qualifications and is approved to boot, an audit chain is established that’s rooted in the hardware of the platform security module and extends to modules loaded into the kernel.

Figure 2: IBM Cloud VPC secure boot audit chain

How do I use verified hypervisors on IBM Cloud VPC virtual servers?

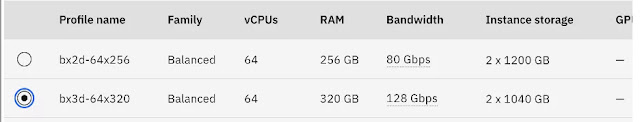

Good question. Hypervisor verification is on by default for supported IBM Cloud Virtual Servers for VPC. Choose a generation 3 virtual server profile (such as bx3d, cx3d, mx3d or gx3), as shown below, to help ensure your virtual server instances run on supported, hypervisor-verified servers. These capabilities are readily available as part of existing offerings.

Figure 3: IBM Cloud Virtual Servers for VPC, Generation 3
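If you select profiles in automation, a small guard can confirm that a chosen profile belongs to one of the generation 3 families named above. This is a hypothetical helper, not an IBM Cloud API: the family list contains only the examples given in this article and may not be exhaustive, and the assumption that a profile name begins with its family followed by a hyphen (for example `bx3d-2x10`) should be checked against the current profile catalog.

```python
# Families named in this article; the real catalog may contain others.
GEN3_FAMILIES = {"bx3d", "cx3d", "mx3d", "gx3"}


def is_generation3_profile(profile_name):
    """Return True if the profile's family prefix is a known generation 3 family."""
    # Assumes names look like "<family>-<size>", e.g. "bx3d-2x10".
    family = profile_name.strip().lower().split("-", 1)[0]
    return family in GEN3_FAMILIES


for name in ("bx3d-2x10", "bx2-2x8", "gx3-16x80x1l4"):
    print(name, is_generation3_profile(name))
```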

IBM Cloud continues to evolve its security architecture and enhances it by introducing new features and capabilities to help support our customers.

Source: ibm.com