In the realm of software development, efficiency and innovation are of paramount importance. As businesses strive to deliver cutting-edge solutions at an unprecedented pace, generative AI is poised to transform every stage of the software development lifecycle (SDLC).

A McKinsey study shows that software developers can complete coding tasks up to twice as fast with generative AI. From use case creation to test script generation, generative AI offers a streamlined approach that accelerates development while maintaining quality. This groundbreaking technology is revolutionizing software development and offering tangible benefits for businesses.

Bottlenecks in the software development lifecycle

Traditionally, software development involves a series of time-consuming and resource-intensive tasks. For instance, creating use cases requires meticulous planning and documentation, often involving multiple stakeholders and iterations. Designing data models and generating Entity-Relationship Diagrams (ERDs) demand significant effort and expertise. Moreover, techno-functional consultants with specialized skills need to be onboarded to translate business requirements into technical artifacts (for example, converting use cases into process interactions in the form of sequence diagrams).

Once the architecture is defined, translating it into backend Java Spring Boot code adds another layer of complexity. Developers must write and debug code, a process that is prone to errors and delays. Crafting frontend UI mock-ups involves extensive design work, often requiring specialized skills and tools.

Testing compounds these challenges further. Writing test cases and scripts manually is laborious, and maintaining test coverage across evolving codebases is a persistent struggle. As a result, software development cycles can stretch out, delaying time-to-market and increasing costs.

In summary, the traditional SDLC can be riddled with inefficiencies. Common pain points include:

- Time-consuming tasks: Creating use cases, data models, ERDs, sequence diagrams, test scenarios and test cases often involves repetitive, manual work.

- Inconsistent documentation: Documentation can be scattered and outdated, leading to confusion and rework.

- Limited developer resources: Highly skilled developers are in high demand, and repetitive tasks can drain their time and focus.

The new approach: IBM watsonx to the rescue

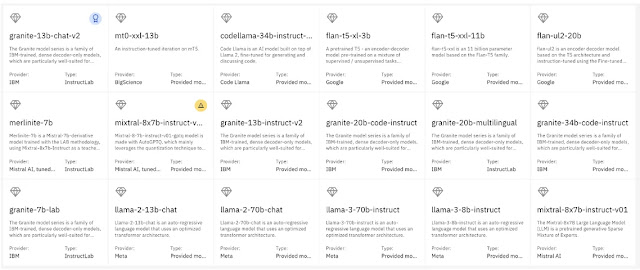

Tata Consultancy Services (TCS), in partnership with IBM®, developed a point of view that incorporates IBM watsonx™ to automate many tedious tasks and free developers to focus on innovation. Features include:

- Use case creation: Users describe a desired feature in natural language; watsonx analyzes the input and drafts comprehensive use cases, saving valuable time.

- Data model creation: Based on use cases and user stories, watsonx can generate robust data models representing the software’s data structure.

- ERD generation: The data model can be automatically translated into a visual ERD, providing a clear picture of the relationships between entities.

- DDL script generation: Once the ERD is defined, watsonx can generate the Data Definition Language (DDL) scripts that create the database schema.
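The article does not say what format watsonx emits for data models, ERDs or DDL, and nothing below calls watsonx. As a rough illustration of the model-to-ERD-to-DDL translation those bullets describe, here is a minimal Python sketch, assuming a hypothetical two-entity model (`customer`, `orders`) and hypothetical helper names (`Column`, `Entity`, `to_ddl`, `to_mermaid_erd`, `check_ddl`). It renders the relationships as Mermaid ER diagram text and the tables as `CREATE TABLE` statements, then sanity-checks the SQL against an in-memory SQLite database.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Column:
    name: str
    sql_type: str
    primary_key: bool = False
    nullable: bool = True
    references: Optional[str] = None  # "table.column" when this is a foreign key


@dataclass
class Entity:
    name: str
    columns: List[Column] = field(default_factory=list)


def to_ddl(entity: Entity) -> str:
    """Render one entity as a CREATE TABLE statement."""
    lines = []
    for col in entity.columns:
        parts = [col.name, col.sql_type]
        if col.primary_key:
            parts.append("PRIMARY KEY")
        elif not col.nullable:
            parts.append("NOT NULL")
        lines.append("    " + " ".join(parts))
    # Foreign-key constraints follow the column definitions.
    for col in entity.columns:
        if col.references:
            table, ref_col = col.references.split(".")
            lines.append(f"    FOREIGN KEY ({col.name}) REFERENCES {table}({ref_col})")
    return f"CREATE TABLE {entity.name} (\n" + ",\n".join(lines) + "\n);"


def to_mermaid_erd(entities: List[Entity]) -> str:
    """Render each foreign key as a Mermaid 'one parent to zero-or-more children' edge."""
    out = ["erDiagram"]
    for entity in entities:
        for col in entity.columns:
            if col.references:
                parent = col.references.split(".")[0]
                out.append(f'    {parent} ||--o{{ {entity.name} : "{col.name}"')
    return "\n".join(out)


def check_ddl(entities: List[Entity]) -> List[str]:
    """Run the generated DDL in an in-memory SQLite database; return the table names."""
    conn = sqlite3.connect(":memory:")
    try:
        # Parents are listed before children, as many databases require.
        for entity in entities:
            conn.execute(to_ddl(entity))
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
        return [name for (name,) in rows]
    finally:
        conn.close()


# Hypothetical data model, as it might be derived from an "order checkout" use case.
CUSTOMER = Entity("customer", [
    Column("id", "INTEGER", primary_key=True),
    Column("email", "VARCHAR(255)", nullable=False),
])
ORDERS = Entity("orders", [
    Column("id", "INTEGER", primary_key=True),
    Column("customer_id", "INTEGER", nullable=False, references="customer.id"),
    Column("total", "DECIMAL(10, 2)"),
])

if __name__ == "__main__":
    model = [CUSTOMER, ORDERS]
    print(to_mermaid_erd(model))
    for entity in model:
        print(to_ddl(entity))
    print(check_ddl(model))
```

Keeping the data model as structured data, rather than going straight to SQL, is the design choice that lets the same model drive both the diagram and the DDL; a generated model could be checked the same way before anyone reviews it by hand.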

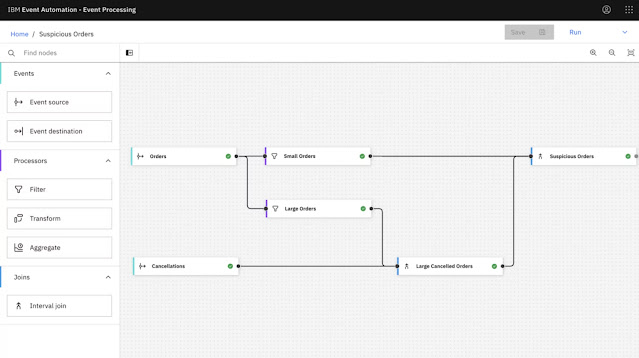

- Sequence diagram generation: watsonx can generate sequence diagrams that visualize the process interactions of a use case and its data models, providing a clear understanding of the business process.

- Back-end code generation: watsonx can translate data models and use cases into functional back-end code, such as Java Spring Boot. This doesn’t eliminate the need for developers; it lets them focus on complex logic and optimization.

- Front-end UI mock-up generation: watsonx can analyze user stories and data models to generate mock-ups of the software’s user interface (UI). These mock-ups help visualize the application and gather early feedback.

- Test case and script generation: watsonx can analyze code and use cases to create automated test cases and scripts, boosting software quality.
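The sequence diagram bullet describes turning a use case's process interactions into a visual artifact. The article does not show how watsonx does this or what it outputs; as a minimal sketch under assumed names (`Step`, `to_mermaid_sequence`, and a hypothetical "Place order" use case), the Python below renders an ordered list of interactions as Mermaid sequence diagram text, one common text format that tools can render as a diagram. It assumes participant names are single identifiers without spaces.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    sender: str    # participant sending the message (identifier, no spaces)
    receiver: str  # participant receiving it
    message: str
    is_reply: bool = False  # replies are drawn as dotted arrows


def to_mermaid_sequence(use_case: str, steps: List[Step]) -> str:
    """Render an ordered list of use-case steps as Mermaid sequenceDiagram text."""
    participants: List[str] = []
    for step in steps:
        for name in (step.sender, step.receiver):
            if name not in participants:  # keep first-appearance order
                participants.append(name)
    lines = ["sequenceDiagram", f"    %% Use case: {use_case}"]
    lines += [f"    participant {name}" for name in participants]
    for step in steps:
        arrow = "-->>" if step.is_reply else "->>"
        lines.append(f"    {step.sender}{arrow}{step.receiver}: {step.message}")
    return "\n".join(lines)


# Hypothetical "Place order" use case, written as ordered interactions.
PLACE_ORDER = [
    Step("Customer", "OrderService", "submitOrder(cart)"),
    Step("OrderService", "Database", "insert order"),
    Step("Database", "OrderService", "order id", is_reply=True),
    Step("OrderService", "Customer", "confirmation", is_reply=True),
]

if __name__ == "__main__":
    print(to_mermaid_sequence("Place order", PLACE_ORDER))
```

Because the same ordered steps describe what each participant should send and receive, they could also serve as a starting checklist for the test scenarios in the last bullet; that reuse is a suggestion here, not something the article claims watsonx does.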

Efficiency, speed, and cost savings

Together, these watsonx automations deliver benefits such as:

- Increased developer productivity: By automating repetitive tasks, watsonx frees up developers’ time for creative problem-solving and innovation.

- Accelerated time-to-market: With streamlined processes and automated tasks, businesses can get their software to market faster and capitalize on new opportunities.

- Reduced costs: Less manual work translates to lower development costs. Additionally, catching bugs early with watsonx-powered testing saves time and resources.

Embracing the future of software development

TCS and IBM believe that generative AI is not here to replace developers, but to empower them. By automating mundane tasks and generating artifacts throughout the SDLC, watsonx paves the way for faster, more efficient and more cost-effective software development. Embracing platforms like IBM watsonx is not just about adopting new technology; it’s about unlocking the full potential of software development in a digital age.

Source: ibm.com