Customer-centricity is the heart of every successful retailer. But as customers' expectations evolve quickly, retailers are under pressure to increase sales and get product out the door as fast as possible, sometimes forgoing profitability and efficiency. As a result, a strategy initially designed to put the customer first can lead to over-promising, leaving disappointed shoppers to face late deliveries or cancelled orders.

Fortunately, it is possible to make promises that are reliable and profitable. And there’s no time to waste.

Why now?

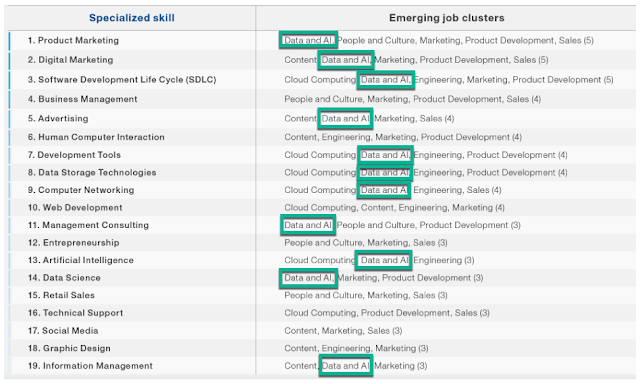

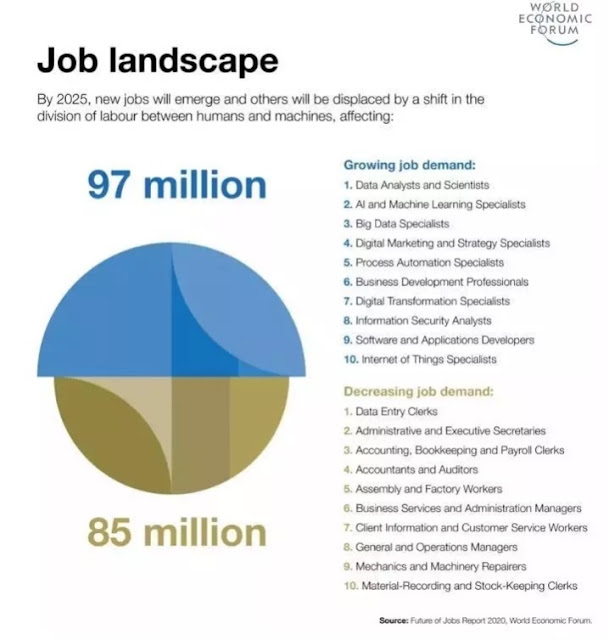

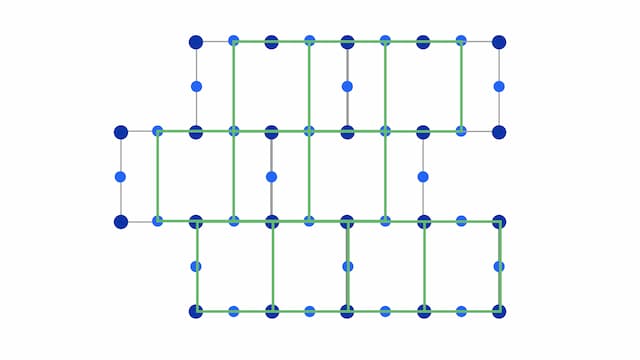

Retailers have prioritized new omnichannel operations to meet customers' needs: just over 60% of retailers report that they are currently implementing curbside pickup or buy online, pick up in store (BOPIS) solutions, or plan to in the next 12 months. But in the rush to introduce seamless customer experiences and manage omnichannel complexity, many retailers haven't considered their approach to promising and how directly it affects customer experiences. In fact, 35% of retailers don't have a well-defined omnichannel fulfillment strategy in place.

Today, retailers typically rely on whatever inventory their system reports as available, then calculate the shipping time to present a promise date. Without real-time inventory visibility, however, they can't see what is actually available before they promise it to customers. And inventory is only one factor. Before retailers can make accurate, profitable promises, they must also account for labor and throughput capacity to prepare orders, node and carrier schedules, and cost factors and constraints.

Every retailer wants to increase sales and get products into shoppers' hands faster. However, if you can't do this in a way that is efficient and reliable for customers, revenue and profitability suffer in the long term. Visibility and trust throughout the process are critical to every shopper experience. Customers are often disappointed when inventory they believed was in stock turns out to be unavailable or backordered at checkout. In fact, roughly 47% of shoppers will shop elsewhere if they cannot see inventory availability before they buy. Giving customers early insight into estimated delivery dates is also critical: nearly 50% of shoppers would abandon their cart because of a mismatch between the delivery date they expected and the actual delivery date.

What’s changed?

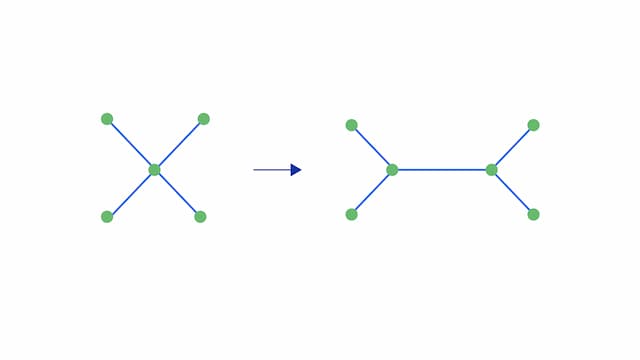

Retailers need a modern solution that brings together real-time inventory visibility and advanced fulfillment logic to balance profitability and customer experience. That's why we are proud to announce IBM Sterling Intelligent Promising, a solution designed to give retailers advanced inventory promising for next-generation omnichannel experiences, from discovery to delivery. Retailers can preserve brand trust by giving shoppers greater certainty, choice, and transparency across their buying journey. The solution also helps retailers increase digital conversions and in-store sales while achieving near-perfect compliance with delivery-time SLAs, fewer split packages, lower shipping costs, and improved inventory turns.

What’s the impact?

IBM Sterling Intelligent Promising is your superpower to enhance the shopping experience by improving certainty, choice and transparency across the customer journey.

➥ Certainty. With real-time inventory visibility and advanced, cost-based optimization, you can deliver accurate promise dates throughout the order process (on the product list page, on the product details page, and during checkout) so that customers know exactly when an order will arrive, with no surprises. When customers can see what is truly available, along with reliable delivery dates, retailers can reduce order cancellations, improve cart conversion, and feel confident about their bottom line.

➥ Choice. Empower shoppers to find and filter stores and products by availability, delivery date, and fulfillment method. Make enterprise-wide inventory available to promise based on easy-to-configure business rules, enabling faster delivery with more options for customers.

➥ Transparency. Provide clarity when items are low in stock or backordered. With inventory intelligence and data from carriers and other processing and fulfillment factors, retailers can set pre-purchase expectations whenever potential supply chain disruptions make on-time, in-full delivery uncertain.

Improve digital and in-store conversions. If shoppers can't see available inventory online, they'll shop elsewhere. Improve conversion by providing an accurate view of inventory up front. Go the extra mile by proactively suggesting related products as an upsell opportunity without changing shipping costs, labor costs, or delivery dates. Optimization logic influences promising across the order journey and supports even the most complex scenarios, such as multi-brand orders and orders that include third-party services like furniture assembly.

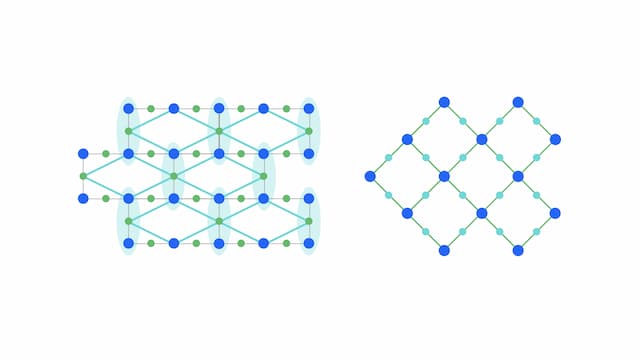

Increase omnichannel revenue and profitability. As shopper expectations rise and omnichannel becomes increasingly complex, retailers need to optimize fulfillment at scale, evaluating thousands of fulfillment permutations in milliseconds. Advanced analytics and artificial intelligence (AI) are essential for multi-objective optimization against configured business goals. Detailed "decision explainers" help business users understand and trust the recommendations, driving continuous learning and wider adoption. With this foundation in place, you can start making promises you can keep while improving sales and profitability.

Early in the customer journey, prioritize business objectives such as profitability and customer satisfaction while weighing fulfillment factors (distance, costs, capacity, schedules, and special handling requirements) so you can determine the lowest-cost option for each customer in seconds. For greater accuracy, use aggregated carrier data such as shipping rates, transit times, and even cross-border fees. Post-purchase, optimize cost-to-serve by combining shipping costs from carrier management providers and capacity across locations and resource pools with predefined rules and business drivers. Use AI capabilities to predict sell-through patterns and forecast seasonal demand, reducing stockouts and markdowns to maximize revenue and improve margins.

As omnichannel continues to mature, make promises that are reliable and profitable. Start creating next-generation omnichannel experiences with sophisticated inventory promising. Get more details on how IBM Sterling Intelligent Promising can help you enhance the shopping experience and improve digital and in-store sales while increasing omnichannel conversions and profitability.

Source: ibm.com