Thursday, 30 November 2023

How blockchain enables trust in water trading

Thursday, 23 November 2023

Level up your Kafka applications with schemas

What is a schema?

Why should you use a schema?

What is a schema registry?

Optimize your Kafka environment by using a schema registry.

- Forward Compatibility: where the producing applications can be updated to a new version of the schema, and all consuming applications will be able to continue to consume messages while waiting to be migrated to the new version.

- Backward Compatibility: where consuming applications can be migrated to a new version of the schema first, and are able to continue to consume messages produced in the old format while producing applications are migrated.

- Full Compatibility: when schemas are both forward and backward compatible.
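The three compatibility modes above can be illustrated with a minimal sketch. It assumes a deliberately simplified schema model (a dictionary of field names mapped to a default value, or to a `REQUIRED` marker) and a record-style resolution rule in which a reader ignores unknown fields and needs a default for any field the writer did not send. The names `REQUIRED`, `can_read` and `compatibility` are hypothetical and are not part of any schema registry API; type changes are ignored.

```python
# Simplified model (an assumption for illustration): a schema maps each field
# name to its default value, or to REQUIRED when the field has no default.
REQUIRED = object()


def can_read(reader: dict, writer: dict) -> bool:
    """True if a consumer using `reader` can decode data produced with `writer`.

    Fields the reader does not know are ignored; fields the reader expects
    but the writer did not send must have a default.
    """
    return all(
        name in writer or default is not REQUIRED
        for name, default in reader.items()
    )


def compatibility(old: dict, new: dict) -> str:
    """Classify a schema change as 'full', 'backward', 'forward' or 'none'."""
    # Backward: consumers on the new schema can still read old messages.
    backward = can_read(reader=new, writer=old)
    # Forward: consumers on the old schema can read new messages.
    forward = can_read(reader=old, writer=new)
    if backward and forward:
        return "full"
    if backward:
        return "backward"
    if forward:
        return "forward"
    return "none"


# Hypothetical order schemas used only for this example.
v1 = {"order_id": REQUIRED, "amount": REQUIRED}
v2_optional_field = {"order_id": REQUIRED, "amount": REQUIRED, "currency": "USD"}
v2_required_field = {"order_id": REQUIRED, "amount": REQUIRED, "currency": REQUIRED}
v2_removed_field = {"order_id": REQUIRED}

print(compatibility(v1, v2_optional_field))  # adding a field with a default
print(compatibility(v1, v2_required_field))  # adding a field without a default
print(compatibility(v1, v2_removed_field))   # removing a required field
```

In this model, adding a field with a default is the only one of the three changes that is safe regardless of whether producers or consumers are upgraded first.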

What’s next?

Tuesday, 21 November 2023

Asset lifecycle management strategy: What’s the best approach for your business?

What is an asset?

What is asset lifecycle management?

- Planning: In the first stage of the asset lifecycle, stakeholders assess the need for the asset, its projected value to the organization and its overall cost.

- Valuation: A critical part of the planning stage is assessing the overall value of an asset. Decision-makers must take into account many different pieces of information in order to gauge this, including the asset’s likely length of useful life, its projected performance over time and the cost of disposing of it. One technique that is becoming increasingly valuable during this stage is the creation of a digital twin.

- Digital twin technology: A digital twin is a virtual representation of an asset a company intends to acquire that assists organizations in their decision-making process. Digital twins allow companies to run tests and predict performance based on simulations. With a good digital twin, it’s possible to predict how well an asset will perform under the conditions it will be subjected to.

- Procurement and installation: The next stage of the asset lifecycle concerns the purchase, transportation and installation of the asset. During this stage, operators will need to consider a number of factors, including how well the new asset is expected to perform within the overall ecosystem of the business, how its data will be shared and incorporated into business decisions, and how it will be put into operation and integrated with other assets the company owns.

- Utilization: This phase is critical to maximizing asset performance over time and extending its lifespan. Recently, enterprise asset management systems (EAMs) have become an indispensable tool in helping businesses perform predictive and preventive maintenance so they can keep assets running longer and generating more value. We’ll go deeper into EAMs, the technologies underpinning them and their implications for asset lifecycle management strategy in another section.

- Decommissioning: The final stage of the asset lifecycle is the decommissioning of the asset. Valuable assets can be complex and markets are always shifting, so during this phase, it’s important to weigh the depreciation of the current asset against the rising cost of maintaining it in order to calculate its overall ROI. Decision-makers will want to take into consideration a variety of factors when attempting to measure this, including asset uptime, projected lifespan and the shifting costs of fuel and spare parts.
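As a rough illustration of weighing depreciation against rising maintenance cost, the sketch below assumes straight-line depreciation and a maintenance cost that grows by a fixed percentage each year. Both models, all function names and every figure are assumptions made for this example, not a method described in the article. It reports the first year in which the annual maintenance cost exceeds the asset's remaining book value, which could serve as one signal that decommissioning deserves a closer look.

```python
from typing import Optional


def straight_line_book_value(purchase_cost: float, salvage_value: float,
                             useful_life_years: int, year: int) -> float:
    """Book value after `year` years of straight-line depreciation."""
    annual_depreciation = (purchase_cost - salvage_value) / useful_life_years
    return max(salvage_value, purchase_cost - annual_depreciation * year)


def maintenance_cost(first_year_cost: float, annual_growth: float, year: int) -> float:
    """Maintenance cost in a given year, where year 1 costs `first_year_cost`."""
    return first_year_cost * (1 + annual_growth) ** (year - 1)


def first_year_to_review(purchase_cost: float, salvage_value: float,
                         useful_life_years: int, first_year_cost: float,
                         annual_growth: float) -> Optional[int]:
    """First year in which maintenance cost exceeds remaining book value, if any."""
    for year in range(1, useful_life_years + 1):
        upkeep = maintenance_cost(first_year_cost, annual_growth, year)
        value = straight_line_book_value(
            purchase_cost, salvage_value, useful_life_years, year
        )
        if upkeep > value:
            return year
    return None  # maintenance never overtakes book value within the useful life


# Hypothetical asset: 100,000 purchase cost, 10,000 salvage value, 10-year life,
# 5,000 first-year maintenance growing 25% per year.
review_year = first_year_to_review(100_000, 10_000, 10, 5_000, 0.25)
print(review_year)
```

A fuller analysis would also account for the factors named above, such as asset uptime and the shifting costs of fuel and spare parts.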

The benefits of asset lifecycle management strategy

- Scalability of best practices: Today’s asset lifecycle management strategies use cutting-edge technologies coupled with rigorous, systematic approaches to forecast, schedule and optimize all daily maintenance tasks and long-term repair needs.

- Streamlined operations and maintenance: Minimize the likelihood of equipment failure, anticipate breakdowns and perform preventive maintenance when possible. Today’s top EAM systems dramatically improve the decision-making capabilities of managers, operators and maintenance technicians by giving them real-time visibility into equipment status and workflows.

- Reduced maintenance costs and downtime: Monitor assets in real time, regardless of complexity. By coupling asset information gathered through the Internet of Things (IoT) with powerful analytics capabilities, businesses can now perform cost-effective preventive maintenance, intervening before a critical asset fails and preventing costly downtime.

- Greater alignment across business units: Optimize management processes according to a variety of factors beyond just the condition of a piece of equipment. These factors can include available resources (e.g., capital and manpower), projected downtime and its implications for the business, worker safety, and any potential security risks associated with the repair.

- Improved compliance: Comply with laws surrounding the management and operation of assets, regardless of where they are located. Data management and storage requirements vary widely from country to country and are constantly evolving. Avoid costly penalties by monitoring assets in a strategic, systematized manner that ensures compliance—no matter where data is being stored.

Enterprise asset management systems (EAMs)

Computerized maintenance management systems (CMMS)

Preventive maintenance

Asset tracking

- Radio frequency identification (RFID) tags: RFID tags broadcast information about the asset they’re attached to using radio-frequency signals. They can transmit a wide range of important information, including asset location, temperature and even the humidity of the environment the asset is located in.

- WiFi-enabled tracking: Like RFIDs, WiFi-enabled tracking devices monitor a range of useful information about an asset, but they only work if the asset is within range of a WiFi network.

- QR codes: Like their predecessor, the universal barcode, QR codes provide asset information quickly and accurately. But unlike barcodes, they are two-dimensional and easily readable with a smartphone from any angle.

- Global Positioning System (GPS): With a GPS system, a tracker placed on an asset calculates its position from signals it receives from Global Navigation Satellite System (GNSS) satellites and reports that position to the tracking system. This enables managers to track an asset anywhere on the globe, in real time.

Asset lifecycle management strategy solutions

Saturday, 18 November 2023

Creating a sustainable future with the experts of today and tomorrow

Equipping the current and future workforce with green skills

Uniting sustainability experts in strategic partnerships

- The University of Sharjah will build a model and application to monitor and forecast water access conditions in the Middle East and North Africa to support communities in arid and semi-arid regions with limited renewable internal freshwater resources.

- The University of Chicago Trust in Delhi will aggregate water quality information in India, build and deploy tools designed to democratize access to water quality information, and help improve water resource management for key government and nonprofit organizations.

- The University of Illinois will develop an AI geospatial foundation model to help predict rainfall and forecast floods in mountain headwaters across the Appalachian Mountains in the US.

- Instituto IGUÁ will create a cloud-based platform for sanitation infrastructure planning in Brazil alongside local utility providers and governments.

- Water Corporation will design a self-administered water quality testing system for Aboriginal communities in Western Australia.

Supporting a just transition for all

Friday, 17 November 2023

An introduction to Wazi as a Service

In today’s hyper-competitive digital landscape, the rapid development of new digital services is essential for staying ahead of the curve. However, many organizations face significant challenges when it comes to integrating their core systems, including mainframe applications, with modern technologies. This integration is crucial for modernizing core enterprise applications on hybrid cloud platforms. Some 33% of developers lack the necessary skills or resources, which hinders their productivity in delivering products and services. Moreover, 36% of developers struggle with collaboration between development and IT operations, leading to inefficiencies in the development pipeline. To compound these issues, repeated surveys highlight “testing” as the primary area causing delays in project timelines. Companies like State Farm and BNP Paribas are taking steps to standardize development tools and approaches across their platforms to overcome these challenges and drive transformation in their business processes.

How does Wazi as a Service help drive modernization?

What are the benefits of Wazi as a Service on IBM Cloud?

How does IBM Cloud Financial Service Framework help in industry solutions?

Get to know Wazi as a Service

Thursday, 16 November 2023

Leveraging IBM Cloud for electronic design automation (EDA) workloads

Electronic design automation (EDA) is a market segment consisting of software, hardware and services with the goal of assisting in the definition, planning, design, implementation, verification and subsequent manufacturing of semiconductor devices (or chips). The primary providers of this service are semiconductor foundries or fabs.

While EDA solutions are not directly involved in the manufacture of chips, they play a critical role in three ways:

1. EDA tools are used to design and validate the semiconductor manufacturing process to ensure it delivers the required performance and density.

2. EDA tools are used to verify that a design will meet all the manufacturing process requirements. This area of focus is known as design for manufacturability (DFM).

3. After the chip is manufactured, there is a growing requirement to monitor the device’s performance from post-manufacturing test to deployment in the field. This third application is referred to as silicon lifecycle management (SLM).

Growing demand for compute power to support higher-fidelity simulation and modeling workloads, increased competition, and the need to bring products to market faster mean that EDA HPC environments are continually growing in scale. Organizations are looking at how best to leverage technologies such as accelerators, containerization and hybrid cloud to gain a competitive computing edge.

EDA software and integrated circuit design

Electronic design automation (EDA) software plays a pivotal role in shaping and validating cutting-edge semiconductor chips, optimizing their manufacturing processes, and ensuring that advancements in performance and density are consistently achieved with unwavering reliability.

The expenses associated with acquiring and maintaining the necessary computing environments, tools and IT expertise to operate EDA tools present a significant barrier for startups and small businesses seeking entry into this market. These costs also remain a crucial concern for established firms implementing EDA designs. Chip designers and manufacturers are under immense pressure to deliver new chip generations that offer increased density, reliability and efficiency while adhering to strict timelines, a pivotal factor for success.

This challenge in integrated circuit (IC) design and manufacturing can be visualized as a triangular opportunity space, as depicted in the figure below:

EDA industry challenges

Leveraging cloud computing to speed time to market

How IBM is leading EDA

Thursday, 9 November 2023

AI assistants optimize automation with API-based agents

Training and enriching API-based agents for target use cases

Orchestrating multiple agents to automate complex workflows

Wednesday, 8 November 2023

IBM and Microsoft work together to bring Maximo Application Suite onto Azure

Maximo ManagePlus highlights

Key benefits of running Maximo Application Suite on the Microsoft Azure platform

- Provide your enterprise MAS users with 24×7 access to MAS application experts.

- Increase resolution of L2/L3 service requests.

- Increase your enterprise application support without expanding your application support team.

- Run your enterprise MAS application on Azure infrastructure that is continuously monitored and patched, so that your hybrid cloud operations team can focus on other Azure workloads.

- Increase your Azure workload without expanding your cloud operations team.

- Streamline time-consuming work.

- Rely on people, processes and tools with a proven track record of exceeding MAS application and infrastructure availability service level objectives (SLOs). Can your enterprise afford unplanned asset management system downtime?

- Improve the stability of SLOs and service level agreements (SLAs).

- Subscribe to Maximo ManagePlus to improve application and infrastructure patching cadence and automate endpoint security configuration.

- Subscribe to Maximo ManagePlus to yield savings that you can invest in new projects that reduce bottom-line expenses or that create and evolve brand-differentiating, revenue-generating products and services.

Act now with Maximo Application Suite on Azure

Tuesday, 7 November 2023

Child support systems modernization: The time is now

- Empower families to get help when, where and how they need it, including via virtual and real-time communication mechanisms.

- Provide quick and transparent services to ease family stress and frustration in times of need.

- Be intuitive and user-friendly in order to reduce inefficiencies and manual effort for both families and caseworkers.

- Automate routine tasks to allow caseworkers to provide more personalized services and build relationships with families.

- Empower caseworkers with online tools to collaborate with colleagues and access knowledge repositories.

State-level challenges and requirements

- Envisioning a holistic, family-focused model for service delivery that is personal, customized, and collaborative (rather than the “one-size-fits-all” process that ends up not fitting anyone very well).

- Providing more time for caseworkers to engage and collaborate with families by freeing them from inefficient system interfaces and processes.

- Utilizing the capabilities of modern technology stacks rather than continuing to use outdated and limited applications developed on existing systems.

- Upgrading their systems to leverage widely available and competitively priced technology skillsets rather than paying for the scarce, expensive skills required for existing system support.

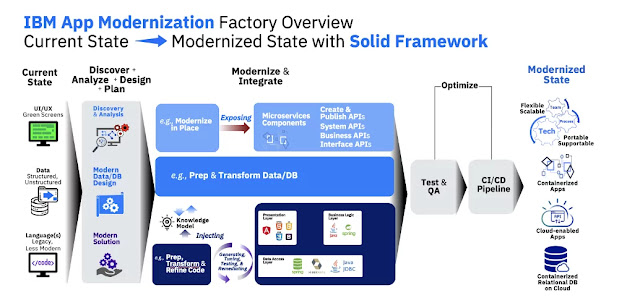

Accelerated Incremental Mainframe Modernization (AIMM)

- IBM Garage Methodology: IBM’s engagement and operating methodology (which brings together industry best practices, technical expertise, systems knowledge, client collaboration and partnership, cloud service providers and the consulting teams) uses a design strategy with iterative creation and launch processes to deliver accelerated outcomes and time to value.

- IBM Consulting Cloud Accelerator (ICCA): This approach accelerates cloud adoption by creating a “wave plan” for migrating and modernizing workloads to cloud platforms. ICCA integrates and orchestrates a wide range of migration tools across IBM’s assets and products, open source components and third-party tools to take a workload from its original platform to a cloud destination.

- Asset Analysis Renovation Catalyst (AARC): This tool automatically extracts business rules from application source code and generates a knowledge model that can be deployed into a rules engine such as Operational Decision Manager (ODM).

- Operational Decision Manager: This business rule management system enables automated responses to real-time data by applying automated decisions based on business rules. ODM enables business users to analyze, automate and govern rules-based business decisions by developing, deploying and maintaining operational system decision logic.
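To make the idea of externalized business rules concrete, here is a minimal sketch in which rules are kept as data, separate from the code that evaluates them. It does not use or imitate the ODM or AARC APIs. The `Rule` class, the `decide` function, the case fields and the two example rules are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Rule:
    """A named business rule: when `condition` holds, emit `decision`."""
    name: str
    condition: Callable[[dict], bool]
    decision: str


# Hypothetical rules for a child support case; real rules would be extracted
# from the existing application and maintained by business users.
RULES: List[Rule] = [
    Rule(
        name="overdue_payment",
        condition=lambda case: case.get("days_overdue", 0) > 30,
        decision="flag_for_caseworker_review",
    ),
    Rule(
        name="missing_contact_details",
        condition=lambda case: not case.get("phone") and not case.get("email"),
        decision="request_contact_details",
    ),
]


def decide(case: dict, rules: List[Rule] = RULES) -> List[str]:
    """Return the decisions of every rule whose condition matches the case."""
    return [rule.decision for rule in rules if rule.condition(case)]


example_case = {"days_overdue": 45, "phone": "", "email": "parent@example.org"}
print(decide(example_case))
```

Because the rules live in a list rather than in branching application logic, a rule can be added or changed without touching `decide`, which is the property that makes rule sets easier to govern during a migration.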

Hybrid cloud architectures for balanced transformation

Technical patterns for cloud migration

- Pattern 1: Migration to cloud with middleware emulator. With this approach, an agency’s systems are migrated to a cloud platform with minimal to no code alterations. The integration of middleware emulators minimizes the need for code changes and ensures smooth functionality during the migration process.

- Pattern 2: Migration to cloud with code refactoring. This approach couples the migration of systems to a cloud environment with necessary code modifications for optimal performance and alignment with cloud architectures. IBM has a broad ecosystem of partners who specialize in using automated tools to make most code modifications.

- Pattern 3: Re-architect and modernize with microservices. This strategy encompasses re-architecting systems with the adoption of microservices-based information delivery channels. This approach modernizes systems in cloud-based architectures which enable efficient communication between the microservices.

- Pattern 4: Cloud data migration for analytics and insights. This strategy focuses on transferring existing data to the cloud and facilitating generation of advanced data analytics and insights, a key feature of modernized systems.

Maintaining business functions across technical migrations

Saturday, 4 November 2023

Apache Kafka and Apache Flink: An open-source match made in heaven

Apache Kafka and Apache Flink working together

Innovating on Apache Flink: Apache Flink for all

“We realize investing in event-driven architecture projects can be a considerable commitment, but we also know how necessary they are for businesses to be competitive. We’ve seen them get stuck altogether due to cost and skills constraints. Knowing this, we designed IBM Event Automation to make event processing easy with a no-code approach to Apache Flink. It gives you the ability to quickly test new ideas, reuse events to expand into new use cases, and help accelerate your time to value.”