The new era of generative AI has spurred the exploration of AI use cases to enhance productivity, improve customer service, increase efficiency and scale IT modernization.

Recent research commissioned by IBM® indicates that as many as 42% of surveyed enterprise-scale businesses have actively deployed AI, while an additional 40% are actively exploring the use of AI technology. But the public sector has been slower to explore AI use cases and deploy new AI-powered tools because of potential risks.

However, the latest CEO Study by the IBM Institute for Business Value found that 72% of surveyed government leaders say that the potential productivity gains from AI and automation are so great that they must accept significant risk to stay competitive.

Driving innovation for tax agencies with trust in mind

Tax or revenue management agencies are a part of the public sector that is likely to benefit from the use of responsible AI tools. Generative AI can revolutionize tax administration and drive toward a more personalized and ethical future. But tax agencies must adopt AI tools with adequate oversight and governance to mitigate risks and build public trust.

These agencies have a myriad of complex challenges unique to each country, but most of them share the goal of increasing efficiency and providing the transparency that taxpayers demand.

In the world of government agencies, the risks associated with deploying AI present themselves in many ways, often with higher stakes than in the private sector. Mitigating data bias, unethical use of data, lack of transparency and privacy breaches is essential.

Governments can help manage and mitigate these risks by relying on IBM’s five fundamental properties for trustworthy AI: explainability, fairness, transparency, robustness and privacy. Governments can also create and execute AI design and deployment strategies that keep humans at the heart of the decision-making process.

Exploring the views of global tax agency leaders

To explore the point of view of global tax agency leaders, the IBM Center for The Business of Government, in collaboration with the American University Kogod School of Business Tax Policy Center, organized a series of roundtables with key stakeholders and released a report exploring AI and taxes in the modern age. Drawing on insights from academics and tax experts from around the world, the report helps us understand how these agencies can harness technology to improve efficiencies and create a better experience for taxpayers.

The report details the potential benefits of scaling the use of AI by tax agencies, including enhancing customer service, detecting threats faster, identifying and tackling tax scams effectively and allowing citizens to access benefits faster.

Since the release of the report, a subsequent roundtable allowed global tax leaders to explore the next steps in modernizing tax agencies around the globe. At both gatherings, participants emphasized the importance of effective governance and risk management.

Responsible AI services improve taxpayer experiences

According to the Tax Administration 2023 report from the OECD Forum on Tax Administration (FTA), 85% of individual taxpayers and 90% of businesses now file taxes digitally. And 80% of tax agencies around the world are implementing leading-edge techniques to capture taxpayer data, with over 60% using virtual assistants. The FTA research indicates that this represents a 30% increase from 2018.

For tax agencies, virtual assistants can be a powerful way to reduce waiting times for citizen inquiries. Assistants available 24/7, such as advanced AI chatbots, can help tax agencies by decentralizing tax support and reducing the errors that lead to incorrect processing of tax filings. These AI assistants also help deliver fast, accurate answers that elevate the taxpayer experience with measurable cost savings. They also enable compliance-by-design tax systems, which provide early warnings of incidental taxpayer errors that, if left unresolved, can contribute to significant tax losses for governments.

However, these advanced AI and generative AI applications come with risks, and agencies must address concerns around data privacy and protection, reliability, tax rights and hallucinations from generative models.

Furthermore, biases against marginalized groups remain a risk. Current risk mitigation strategies (including having human-in-system roles and robust training data) are not necessarily enough. Every country needs to independently determine appropriate risk management strategies for AI, accounting for the complexity of their tax policies and public trust.

What’s next?

Whether using existing large language models or creating their own, global tax leaders should prioritize AI governance frameworks to manage risks, mitigate damage to their reputation and support their compliance programs. They can do this by training generative AI models on their own quality data and by establishing transparent processes with safeguards that detect and flag risks, including model drift and toxic language.

Tax agencies should make sure that technology delivers benefits and produces results that are transparent, unbiased and appropriate. As leaders of these agencies continue to scale the use of generative AI, IBM can help global tax agency leaders deliver a personalized and supportive experience for taxpayers.

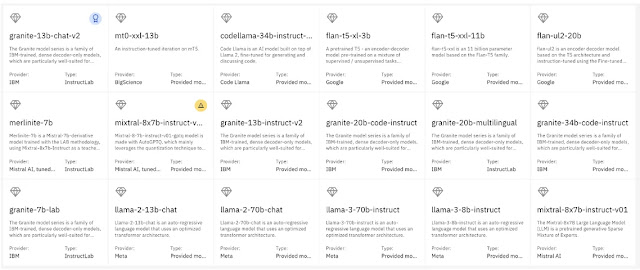

IBM’s decades of work with the largest tax agencies around the world, paired with leading AI technology with watsonx™ and watsonx.governance™, can help scale and accelerate the responsible and tailored deployment of governed AI in tax agencies.

Source: ibm.com