Saturday 27 April 2024

Bigger isn’t always better: How hybrid AI pattern enables smaller language models

Thursday 25 April 2024

5 steps for implementing change management in your organization

Steps to support organizational change management

How to ensure success when implementing organizational change

Tuesday 23 April 2024

Deployable architecture on IBM Cloud: Simplifying system deployment

- Automation: Deployable architecture often relies on automation tools and processes to manage the deployment process. This can involve continuous integration or continuous deployment (CI/CD) pipelines, configuration management tools and others.

- Scalability: The architecture is designed to scale horizontally or vertically to accommodate changes in workload or user demand without requiring significant changes to the underlying infrastructure.

- Modularity: Deployable architecture follows a modular design pattern, where different components or services are isolated and can be developed, tested and deployed independently. This allows for easier management and reduces the risk of dependencies causing deployment issues.

- Resilience: Deployable architecture is designed to be resilient, with built-in redundancy and failover mechanisms that ensure the system remains available even in the event of a failure or outage.

- Portability: Deployable architecture is designed to be portable across different cloud environments or deployment platforms, making it easy to move the system from one environment to another as needed.

- Customisable: Deployable architecture is designed to be customisable and can be configured to suit specific needs. This helps when deploying into diverse environments with varying requirements.

- Monitoring and logging: Robust monitoring and logging capabilities are built into the architecture to provide visibility into the system’s behaviour and performance.

- Secure and compliant: Deployable architectures on IBM Cloud® are secure and compliant by default for hosting your regulated workloads in the cloud. They follow security standards and guidelines, such as IBM Cloud for Financial Services® and SOC Type 2, that ensure the highest levels of security and compliance.

Deployable architectures on IBM Cloud

Deployment strategies for deployable architecture

Use-cases of deployable architecture

- Software developers, IT professionals, system administrators and business stakeholders who need to ensure that their systems and applications are deployed efficiently, securely and cost-effectively. Deployable architecture helps reduce time to market, minimize manual intervention and decrease deployment-related errors.

- Cloud service providers, managed service providers and infrastructure as a service (IaaS) providers to offer their clients a streamlined, reliable and automated deployment process for their applications and services.

- ISVs and enterprises to enhance the deployment experience for their customers, providing them with easy-to-install, customizable and scalable software solutions that help drive business value and competitive advantage.

Saturday 20 April 2024

The journey to a mature asset management system

Asset management and technological innovation

The asset management maturity journey

Friday 19 April 2024

Using dig +trace to understand DNS resolution from start to finish

First, let’s briefly review how a query receives a response in a typical recursive DNS resolution scenario:

- You as the DNS client (or stub resolver) query your recursive resolver for www.example.com.

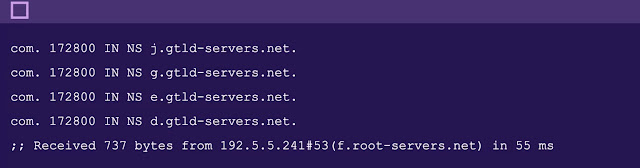

- Your recursive resolver queries the root nameserver for NS records for “com.”

- The root nameserver refers your recursive resolver to the .com Top-Level Domain (TLD) authoritative nameserver.

- Your recursive resolver queries the .com TLD authoritative server for NS records of “example.com.”

- The .com TLD authoritative nameserver refers your recursive server to the authoritative servers for example.com.

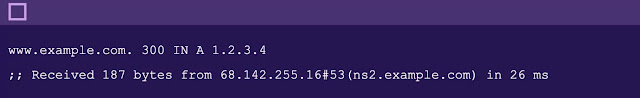

- Your recursive resolver queries the authoritative nameservers for example.com for the A record for “www.example.com” and receives 1.2.3.4 as the answer.

- Your recursive resolver caches the answer for the duration of the time-to-live (TTL) specified on the record and returns it to you.
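The steps above can be sketched as a small in-memory simulation. This is only an illustration under stated assumptions: the server labels (`root`, `com-tld`, `example-auth`), the `SERVERS` table, the 300-second TTL and the `RecursiveResolver` class are all hypothetical, and no real DNS traffic is sent.

```python
import time

# Toy stand-ins for the servers in the walkthrough. Labels, data and the
# 300-second TTL are illustrative assumptions, not real DNS data.
SERVERS = {
    "root": {"referrals": {"com.": "com-tld"}},
    "com-tld": {"referrals": {"example.com.": "example-auth"}},
    "example-auth": {"answers": {"www.example.com.": ("1.2.3.4", 300)}},
}


def query(server, name):
    """Ask one toy server about a name.

    Returns ("answer", (ip, ttl)) or ("referral", next_server_label).
    """
    zone = SERVERS[server]
    if name in zone.get("answers", {}):
        return "answer", zone["answers"][name]
    for suffix, next_server in zone.get("referrals", {}).items():
        if name == suffix or name.endswith("." + suffix):
            return "referral", next_server
    raise LookupError(f"{server} has no data for {name}")


class RecursiveResolver:
    """Follows referrals from the root and caches answers until the TTL expires."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.cache = {}  # name -> (ip, expiry time)

    def resolve(self, name):
        now = self.clock()
        cached = self.cache.get(name)
        if cached is not None and cached[1] > now:
            return cached[0], ["cache"]
        server, path = "root", []
        while True:
            path.append(server)
            kind, data = query(server, name)
            if kind == "answer":
                ip, ttl = data
                self.cache[name] = (ip, now + ttl)
                return ip, path
            server = data  # follow the referral to the next server


if __name__ == "__main__":
    resolver = RecursiveResolver()
    print(resolver.resolve("www.example.com."))  # walks root, TLD, authoritative
    print(resolver.resolve("www.example.com."))  # served from the cache
```

The second element of the returned tuple records which servers were contacted, which makes the difference between a full walk and a cache hit visible.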

Thursday 18 April 2024

Understanding glue records and Dedicated DNS

Domain name system (DNS) resolution is an iterative process where a recursive resolver attempts to look up a domain name using a hierarchical resolution chain. First, the recursive resolver queries the root (.), which provides the nameservers for the top-level domain (TLD), e.g., .com. Next, it queries the TLD nameservers, which provide the domain’s authoritative nameservers. Finally, the recursive resolver queries those authoritative nameservers.

In many cases, we see domains delegated to nameservers inside their own domain, for instance, “example.com.” is delegated to “ns01.example.com.” In these cases, we need glue records at the parent nameservers, usually the domain registrar, to continue the resolution chain.

What is a glue record?

Glue records are DNS records created at the domain’s registrar. These records provide a complete answer when the parent nameserver returns a referral to an authoritative nameserver for a domain. For example, the domain name “example.com” has nameservers “ns01.example.com” and “ns02.example.com”. To resolve the domain name, the DNS would query in order: root, TLD nameservers and authoritative nameservers.

When nameservers for a domain are within the domain itself, a circular reference is created. Having glue records in the parent zone avoids the circular reference and allows DNS resolution to occur.

Glue records can be created at the TLD via the domain registrar or at the parent zone’s nameservers if a subdomain is being delegated away.
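A minimal sketch of the circular reference, assuming a referral is modelled as a list of nameserver names plus an optional dictionary of glue addresses. The function names and the 192.0.2.x addresses (a range reserved for documentation) are hypothetical placeholders, not values from any real zone.

```python
def in_zone(name, zone):
    """True if name is the zone itself or sits anywhere beneath it."""
    return name == zone or name.endswith("." + zone)


def nameserver_addresses(zone, ns_names, glue):
    """Work out how a resolver can reach each nameserver in a referral.

    Returns a dict mapping each nameserver to its glue address, or to None
    when the nameserver lives outside the zone and can be looked up
    independently. Raises LookupError when a nameserver is inside the zone
    being delegated and the referral carries no glue for it.
    """
    addresses = {}
    for ns in ns_names:
        if ns in glue:
            addresses[ns] = glue[ns]
        elif in_zone(ns, zone):
            raise LookupError(
                f"{ns} is inside {zone} and has no glue: circular reference"
            )
        else:
            addresses[ns] = None  # resolvable through a different zone
    return addresses


if __name__ == "__main__":
    ns_names = ["ns01.example.com.", "ns02.example.com."]
    glue = {"ns01.example.com.": "192.0.2.1", "ns02.example.com.": "192.0.2.2"}
    print(nameserver_addresses("example.com.", ns_names, glue))
    try:
        nameserver_addresses("example.com.", ns_names, glue={})
    except LookupError as err:
        print(err)
```

With glue present, the resolver already holds an address for each nameserver. Without it, finding ns01.example.com would require asking the very nameservers it is trying to locate.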

When are glue records required?

Glue records are needed for any nameserver that is authoritative for itself. If a third party, such as a managed DNS provider, hosts the DNS for a zone, no glue records are needed.

IBM NS1 Connect Dedicated DNS nameservers require glue records

IBM NS1 Connect requires that customers use a separate domain for their Dedicated DNS nameservers. As such, the nameservers within this domain will require glue records. Here, we see glue records for exampledns.net being configured in Google Domains with random IP addresses:

Once the glue records have been added at the registrar, the Dedicated DNS domain should be delegated to the IBM NS1 Connect Managed nameservers and the Dedicated DNS nameservers. For most customers, there will be a total of 8 NS records in the domain’s delegation.

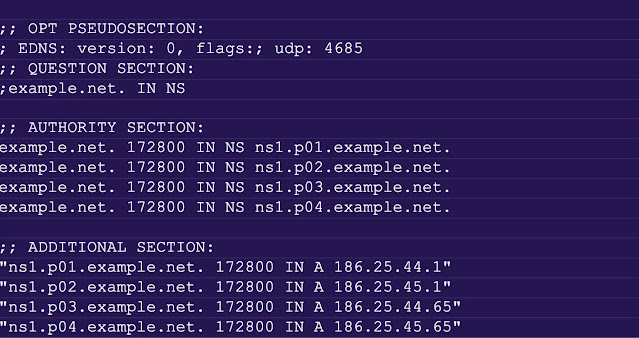

What do glue records look like in the dig tool?

Glue records appear in the ADDITIONAL SECTION of the response. To see a domain’s glue records using the dig tool, directly query a TLD nameserver for the domain’s NS record. The glue records in the example below are enclosed in quotation marks for emphasis: