IBM Quantum announced Eagle, a 127-qubit quantum processor based on the transmon superconducting qubit architecture. The IBM Quantum team adapted advanced semiconductor signal delivery and packaging into a technology node to develop superconducting quantum processors.

Quantum computing hardware technology is making solid progress each year, and more researchers and developers are now programming on cloud-based processors, running more complex quantum programs. In this changing environment, IBM Quantum releases new processors as soon as they pass through a screening process so that users can run experiments on them. These processors are created as part of an agile hardware development methodology, where multiple teams focus on pushing different aspects of processor performance (scale, quality, and speed) in parallel on different experimental processors, and where lessons learned are combined in later revisions.

Today, IBM Quantum’s systems include our 27-qubit Falcon processors, our 65-qubit Hummingbird processors, and now our 127-qubit Eagle processors. At a high level, we benchmark our three key performance attributes on these devices with three metrics:

◉ We measure scale in number of qubits,

◉ Quality in Quantum Volume,

◉ And speed in CLOPS, or circuit layer operations per second.

The current suite of processors has scales of up to Eagle’s 127 qubits and Quantum Volumes of up to 128 on the Falcon r4 and r5 processors, and can run as many as 2,400 circuit layer operations per second on the Falcon r5 processor. Scientists have published over 1,400 papers on these processors by running code on them remotely on the cloud.
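As a small aid to reading the quality metric: Quantum Volume is conventionally reported as 2^n, where n is the width (and depth) of the largest square model circuit the device runs successfully. The sketch below converts a reported Quantum Volume back to that circuit size. The function name `qv_to_width` is illustrative and not part of any IBM tool.

```python
def qv_to_width(quantum_volume: int) -> int:
    """Return n such that quantum_volume == 2**n (the square-circuit size)."""
    if quantum_volume < 1 or quantum_volume & (quantum_volume - 1):
        raise ValueError("Quantum Volume is expected to be a power of two")
    return quantum_volume.bit_length() - 1


falcon_qv = 128  # value quoted in the text for Falcon r4 and r5
square_circuit_width = qv_to_width(falcon_qv)
print(f"QV {falcon_qv} corresponds to {square_circuit_width}-qubit, "
      f"depth-{square_circuit_width} model circuits")
```

Read this way, a Quantum Volume of 128 corresponds to 7-qubit square circuits, which is why the metric captures quality rather than raw qubit count.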

During the five months since the initial Eagle release, the IBM Quantum team has had a chance to analyze Eagle’s performance, compare it to the performance of previous processors such as the IBM Quantum Falcon, and integrate lessons learned into further revisions. At the most recent APS March Meeting, we presented an in-depth look into the technologies that allowed the team to scale to 127 qubits, comparisons between Eagle and Falcon, and benchmarks of the most recent Eagle revision.

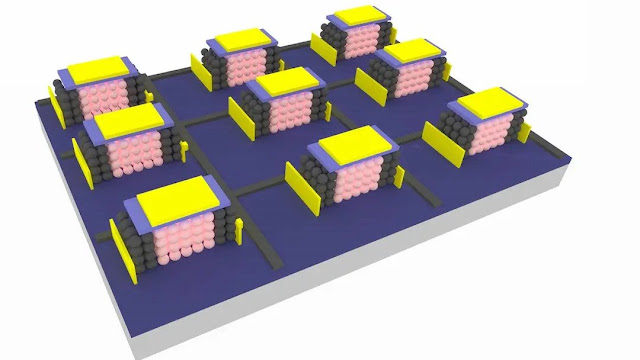

Multi-layer wiring & through-silicon vias

As with all of our processors, Eagle relies on an architecture of superconducting transmon qubits. These qubits are anharmonic oscillators, with anharmonicity introduced by the presence of Josephson junctions, or gaps in the superconducting circuits acting as non-linear inductors. We implement single-qubit gates with specially tuned microwave pulses, which introduce superposition and change the phase of the qubit’s quantum state. We implement two-qubit entangling gates using tunable microwave pulses called cross resonance gates, which irradiate the control qubit at the transition frequency of the target qubit. Performing these microwave-activated operations requires that we be able to deliver microwave signals in a high-fidelity, low-crosstalk fashion.
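To illustrate why anharmonicity matters, a transmon is often approximated as a Duffing oscillator, in which the transition between levels n and n+1 sits at f01 + n·α. The sketch below uses that textbook approximation. The numbers (a 5.0 GHz qubit frequency and a -0.33 GHz anharmonicity) are illustrative assumptions, not values from this article.

```python
def transition_frequency_ghz(n: int, f01_ghz: float, anharmonicity_ghz: float) -> float:
    """Frequency of the n -> n+1 transition in the Duffing-oscillator approximation."""
    return f01_ghz + n * anharmonicity_ghz


# Illustrative (assumed) parameters, typical in order of magnitude only.
F01_GHZ = 5.0
ALPHA_GHZ = -0.33

f01 = transition_frequency_ghz(0, F01_GHZ, ALPHA_GHZ)  # |0> -> |1>
f12 = transition_frequency_ghz(1, F01_GHZ, ALPHA_GHZ)  # |1> -> |2>
print(f"f01 = {f01:.2f} GHz, f12 = {f12:.2f} GHz")
```

Because the two transitions differ in frequency, a microwave pulse tuned to f01 can address the |0⟩ to |1⟩ transition without strongly driving the qubit into |2⟩. In a purely harmonic oscillator the levels would be evenly spaced and this selectivity would be lost.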

Eagle’s core technology advance is the use of what we call our third-generation signal delivery scheme. Our first generation of processors comprised a single layer of metal atop the qubit wafer and a printed circuit board. While this scheme works well for ring topologies (those where the qubits are arranged in a ring), it breaks down if there are any qubits in the center of the ring because we have no way to deliver microwave signals to them.

Our second generation of packaging schemes featured two separate chips, each with a layer of patterned metal, joined by superconducting bump bonds: a qubit wafer atop an interposer wafer. This scheme lets us bring microwave signals to the center of the qubit chip, “breaking the plane,” and was the cornerstone of the Falcon and Hummingbird processors. However, it required that all qubit control and readout lines were routed to the periphery of the chip, and metal layers were not isolated from each other.

A comparison of three generations of chip packaging.

For Eagle, as before, there is a qubit wafer bump-bonded to an interposer wafer. However, we have now added multi-layer wiring (MLW) inside the interposer. We route our control and readout signals in this additional layer, which is well-isolated from the quantum device itself and lets us deliver signals deep into large chips.

The MLW level consists of three metal layers, with patterned, planarized dielectric between each level and short connections called vias connecting the metal levels. Together these levels let us make transmission lines that are fully via-fenced from each other and isolated from the quantum device. We also add through-substrate vias to the qubit and interposer chips.

In the qubit chips, these vias let us suppress box modes, which are sort of like the microwave version of a glass vibrating when you sing a certain pitch inside of it. They also let us build via fences (dense walls of vias) between qubits and other sensitive microwave structures. If they are much less than a wavelength apart, these vias act like a Faraday cage, preventing capacitive crosstalk between circuit elements. In the interposer chip, these vias play all the same roles, while also allowing us to get microwave signals up and down from the MLW to wherever we want inside of a chip.
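To give a feel for "much less than a wavelength," the sketch below estimates the wavelength of a microwave signal inside a dielectric as λ = c / (f·√εr). The 5 GHz signal frequency, the relative permittivity of 11.7 (a commonly quoted room-temperature value for silicon), and the 1/20 spacing fraction are all assumptions chosen for illustration. The article does not state Eagle's actual via pitch or substrate properties.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_mm(freq_hz: float, rel_permittivity: float) -> float:
    """Wavelength in millimetres of a wave at freq_hz inside a uniform dielectric."""
    return SPEED_OF_LIGHT_M_S / (freq_hz * math.sqrt(rel_permittivity)) * 1e3


# Assumed illustrative values, not taken from the article.
SIGNAL_FREQ_HZ = 5e9
SILICON_EPS_R = 11.7
SPACING_FRACTION = 1 / 20  # arbitrary stand-in for "much less than a wavelength"

lam = wavelength_mm(SIGNAL_FREQ_HZ, SILICON_EPS_R)
print(f"wavelength in dielectric: {lam:.1f} mm")
print(f"example via spacing bound: {lam * SPACING_FRACTION:.2f} mm")
```

Under these assumptions the wavelength is on the order of centimetres, so vias spaced at a fraction of a millimetre sit comfortably in the sub-wavelength regime where a fence behaves like a continuous shield.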

Reduction in crosstalk

“Classical crosstalk” is an important source of errors for superconducting quantum computers. Our chips have dense arrays of microwave lines and circuit elements that can transmit and receive microwave energy. If any of these lines transmit energy to each other, a microwave tone that we apply and intend to go to one qubit will go to another.

However, for qubits coupled by busses to allow two-qubit gates, the desired quantum coupling from the bus can look similar to the undesirable effects of classical crosstalk. We use a method called Hamiltonian tomography in order to estimate the amount of effects from the coupling bus and subtract them from the total effects, leaving only the effects of the classical crosstalk.

By knowing the degree of this classical crosstalk, for coupled qubits (that is, linked neighbors) we can then even use a second microwave “cancellation” tone on the target qubit during two-qubit gates to remove some of the effects of classical crosstalk. In other circumstances it is impractical to compensate for this and classical crosstalk increases the error rate of our processors — often requiring a new processor revision.

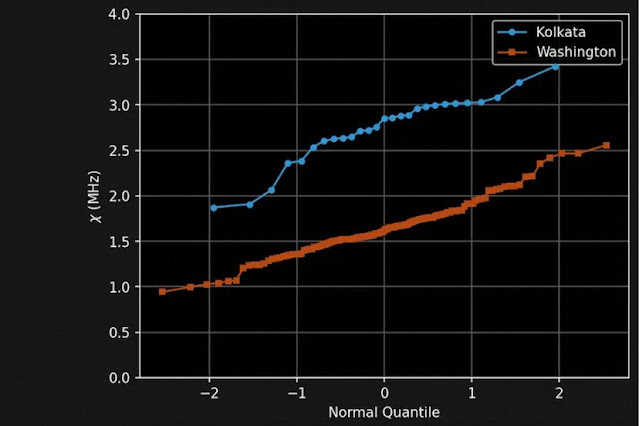

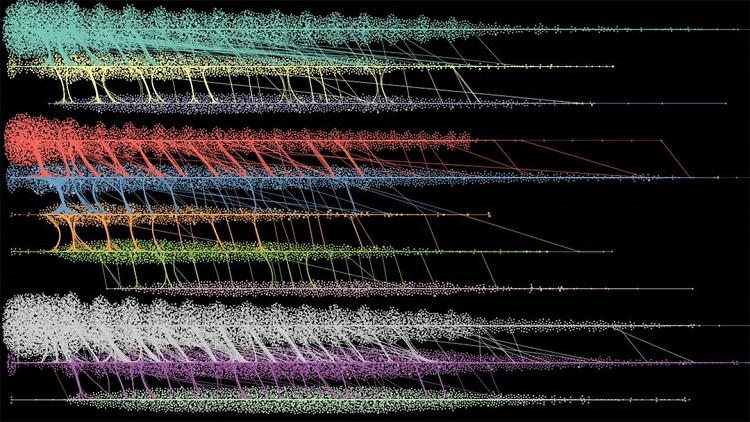

MLW and TSVs provide Eagle with a natural shielding against crosstalk. The accompanying figure shows that, despite having many more qubits and a more complicated signal delivery scheme, a much smaller fraction of qubits in Eagle have high crosstalk than in Falcon, and the worst-case crosstalk is substantially smaller. These improvements were expected. In Falcon, without TSVs, wires run through the chip and can easily transfer energy to the qubits. With Eagle, each signal is surrounded by metal between the ground plane of the qubit chip and the ground plane of the top of the MLW level.

The amount of classical crosstalk on Falcon versus Eagle. Note that this is a quantile plot. The median value is at x=0.
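For readers unfamiliar with quantile plots, the sketch below shows one common construction: sort the measurements and place each one at the standard-normal quantile of its rank, so the median lands at x = 0 and the tails of the distribution spread out to the left and right. That the published figure uses exactly this construction is an assumption, and the crosstalk values here are made-up placeholders rather than measured data.

```python
from statistics import NormalDist


def normal_quantile_positions(values):
    """Pair each sorted value with the standard-normal quantile of its rank."""
    ordered = sorted(values)
    n = len(ordered)
    std_normal = NormalDist()  # mean 0, standard deviation 1
    # (i + 0.5) / n is a simple plotting position strictly between 0 and 1.
    return [(std_normal.inv_cdf((i + 0.5) / n), v) for i, v in enumerate(ordered)]


# Hypothetical crosstalk fractions, for illustration only.
placeholder_crosstalk = [0.004, 0.0005, 0.02, 0.001, 0.002]

for x, value in normal_quantile_positions(placeholder_crosstalk):
    print(f"x = {x:+.2f}  crosstalk = {value}")
```

On such a plot, a curve that sits lower at the far right indicates a better worst case, and a curve that is lower everywhere indicates that a smaller fraction of qubits exceed any given crosstalk level.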

For the qubits with the worst crosstalk, we see that the same qubits have the worst crosstalk on two different Eagle chips. This is exciting, as it suggests the worst crosstalk pairs are due to a design problem we haven’t solved yet, which we can correct in a future generation.

While we’re excited to make strides in handling crosstalk, there are still challenges for the team to tackle. Eagle has 16,000 pairs of qubits, and because crosstalk can be non-local, it may arise between any of these pairs. Finding the pairs with crosstalk of more than 1% would take a long time, about 11 days. We can speed this up by running crosstalk measurements on multiple qubits at the same time. But ironically, that measurement can get corrupted if we choose to run in parallel on two pairs that have bad crosstalk with each other. We’re still learning how to take these datasets in a reasonable amount of time and curate the enormous amount of resulting measurements so we can better understand Eagle and prepare ourselves for our future, even larger devices.
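The pair count and timing can be checked with quick arithmetic. The sketch below assumes the 16,000 figure counts ordered (source, victim) pairs among 127 qubits, and that the 11-day estimate refers to measuring those pairs one at a time. Both are interpretations of the text rather than stated facts, and the idealized speed-up ignores the corruption problem described above.

```python
N_QUBITS = 127   # Eagle's qubit count, from the text
TOTAL_DAYS = 11  # approximate serial measurement time, from the text

# Assumption: each qubit can act as a source of crosstalk onto every other qubit.
ordered_pairs = N_QUBITS * (N_QUBITS - 1)
unordered_pairs = ordered_pairs // 2
seconds_per_pair = TOTAL_DAYS * 24 * 3600 / ordered_pairs


def days_needed(parallel_pairs: int) -> float:
    """Idealized wall-clock days if parallel_pairs pairs are measured at once."""
    return TOTAL_DAYS / parallel_pairs


print(f"ordered pairs:   {ordered_pairs}")
print(f"unordered pairs: {unordered_pairs}")
print(f"implied time per pair: {seconds_per_pair:.0f} s")
print(f"with 11 pairs in parallel: {days_needed(11):.1f} days")
```

Under these assumptions each pair takes roughly a minute, which shows why parallel measurement is attractive and why choosing pairs that do not disturb one another matters.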

Measurements

Although we measure composite “quality” with Quantum Volume, there are many finer-grain metrics we use to characterize individual aspects of our device performance and guide our development, which we also track in Eagle.

Superconducting quantum processors face a variety of errors, including those set by T1 (which measures relaxation from the |1⟩ to the |0⟩ state), Tϕ (which measures dephasing, the randomization of the qubit phase), and T2 (the parallel combination of T1 and Tϕ). Errors are also caused by imperfect control pulses, spurious inter-qubit couplings, imperfect qubit state measurements, and more. They are unavoidable, but the threshold theorem states that we can build an error-corrected quantum computer provided we can decrease hardware error rates below a certain constant threshold. Therefore, a core mission of our hardware team is to improve the coherence times and fidelity of our hardware, while scaling to large enough processors so that we can perform meaningful error-corrected computations.
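The "parallel combination" of T1 and Tϕ is usually written as 1/T2 = 1/(2·T1) + 1/Tϕ, which adds rates in the same way parallel resistors add conductances. The sketch below evaluates that standard relation. The example times are illustrative assumptions, not Eagle or Falcon measurements.

```python
import math


def t2_from_t1_tphi(t1_us: float, tphi_us: float) -> float:
    """Combine relaxation (T1) and pure dephasing (Tphi) into T2, all in microseconds."""
    rate = 1.0 / (2.0 * t1_us) + 1.0 / tphi_us
    return 1.0 / rate


# Illustrative (assumed) values in microseconds.
print(t2_from_t1_tphi(100.0, 200.0))     # dephasing and relaxation both contribute
print(t2_from_t1_tphi(100.0, math.inf))  # no pure dephasing: T2 reaches its limit of 2*T1
```

The second call shows the upper bound T2 ≤ 2·T1: even with no pure dephasing, relaxation alone limits how long phase information can survive.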

Our initial measurements of Eagle’s T1 lagged behind the T1s of our Falcon r5 processors. Therefore, our two-qubit gate fidelities were also lower on Eagle than on Falcon. However, at the same time we were designing and building Eagle, we learned how to make a higher-coherence Falcon processor: Falcon r8.

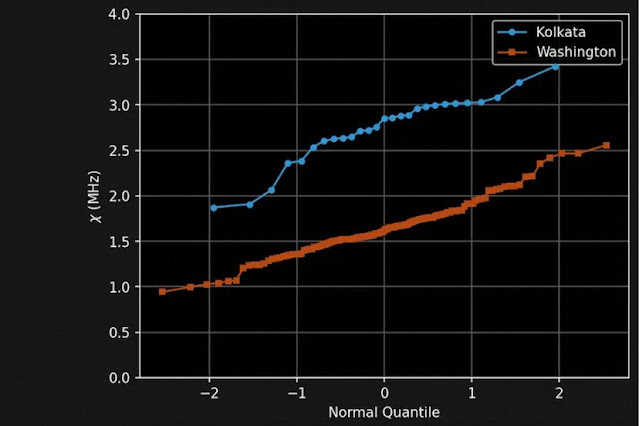

A wonderful example of the advantages of working on scale and quality in parallel then arose: we were able to incorporate these changes into Eagle r3, and now see the same coherence times in Eagle as in Falcon r8. We expect improvements in two-qubit gate fidelities to follow. One continuing focus of our studies into Eagle is performance metrics for readout. Two parameters govern readout: χ, the strength of the coupling of the qubit to the readout resonator, and κ, how quickly photons exit the resonator.

There are tradeoffs involved in selecting each of these parameters, and so the most important goal in our designs is to be able to accurately pick them, and make sure they have a small spread across the device. At present, Eagle is consistently and systematically undershooting Falcon devices on χ, as shown in the figure below — though we think we have a solution for this, planned for future revisions. Additionally, we are seeing a larger spread in κ in Eagle than in Falcon, where the highest-κ qubits are higher, and lowest-κ qubits are lower. We have identified that a mismatch between the frequency of the Purcell filter and the readout resonator frequency may be at play — and our hardware team is at work making improvements on this front, as well.

A comparison of kappa and chi values for a Falcon (Kolkata) and Eagle (Washington) chip.

We see the first round of metrics as a grand success for this new processor. We’ve nearly doubled the size of our processors while reducing crosstalk and improving coherence time, and readout is working well, with the remaining improvements being ones that we understand. Eagle also has improved measurement fidelity over the latest Falcons, with the caveat that the amount of time that measurement takes is different between the processors.

Outlook

Eagle demonstrates the power of applying the principles of agile development to research — in our first iteration of the device, we nearly doubled the processor scale, and made strides toward improved quality thanks to decreased crosstalk. Of course, we’re only just starting to tune the design of this processor. We expect that in upcoming revisions we will see further improvements in quality by targeting readout and frequency collisions.

All the while, we’re making strides advancing quantum computing overall. We’ve begun to measure coherence times of over 400 microseconds on our highest-performing processors, and we continue to push toward some of our lowest-error two-qubit gates yet.

IBM Quantum is committed to following our roadmap to bring a 1,121-qubit processor online by 2023, to continuing to deliver cutting-edge quantum research, and to pushing forward on scale, quality, and speed to deliver the best superconducting quantum processors available. Initial measurements of Eagle have demonstrated that we’re right on track.

Source: ibm.com