This non-von Neumann AI hardware breakthrough in neuromorphic computing could help create machines that recognize objects the way humans do and solve hard mathematical problems for applications ranging from chip design to flight scheduling.

Human brains are extremely efficient at memorizing and learning things, thanks to how information is stored and processed by building blocks called synapses and neurons. Modern-day AI implements neural-network elements that emulate the biophysical properties of these building blocks.

Still, there are certain tasks that, while easy for humans, are computationally difficult for artificial networks, such as sensory perception, motor learning, or simply solving mathematical problems iteratively, where data streams are both continuous and sequential.

Take the task of recognizing a dynamically changing object. The brain has no problem recognizing a playful cat that morphs into different shapes or even hides behind a bush, showing just its tail. A modern-day artificial network, though, would work best only if trained on every possible transformation of the cat, which is not a trivial task.

Our team at IBM’s research lab in Zurich has developed a way to improve these recognition techniques by improving the AI hardware itself. We’ve done it by creating a new form of artificial synapse using well-established phase-change memory (PCM) technology. In a recent Nature Nanotechnology paper, we detail our use of a PCM memtransistive synapse that combines the features of memristors and transistors within the same low-power device. With it, we demonstrate a non-von Neumann, in-memory computing framework that enables powerful cognitive models for useful machine learning, such as short-term spike-timing-dependent plasticity and stochastic Hopfield neural networks.

More compute room at the nano-junction

The field of artificial neural networks dates to the 1940s, when neurophysiologists portrayed biological computations and learning using bulky electrical circuits and generic models. While we have not significantly departed from the underlying fundamental ideas of what neurons and synapses do, what is different today is our capability to build neuro-synaptic circuits at the nanoscale, thanks to advances in lithography methods, and the ability to train the networks with ever-growing quantities of training data.

The general idea of building AI hardware today is to make synapses, or synaptic junctions as they are called, smaller and smaller so that more of them fit in a given space. They are meant to emulate the long-term plasticity of biological synapses: the synaptic weights are stationary in time and change only during updates.

This is inspired by the famous notion attributed to psychologist Donald O. Hebb that “neurons that fire together wire together using long-term changes in the synaptic strengths.” While this is true, modern neuroscience has taught us that there is much more going on at the synapses: neurons wire together with long- and short-term changes, as well as local and global changes in the synaptic connections. We discovered that the ability to achieve the latter was the key to building the next generation of AI hardware.

Present-day hardware that can capture these complex plasticity rules and dynamics of biological synapses relies on elaborate transistor circuits, which are bulky and energy-inefficient for scalable AI processors. Memristive devices, on the other hand, are more efficient, but they have traditionally lacked the features needed to capture the different synaptic mechanisms.

Synaptic Efficacy and Phase Change Memtransistors: The top panel is a representation of the biophysical long-term (LTP) and short-term (STP) plasticity mechanisms, which change the strength of synaptic connections. The middle panel is an emulation of these mechanisms using our phase change memtransistive synapse. The bottom panel illustrates how, by mapping the synaptic efficacy onto the electrical conductance of a device, a range of different synaptic processes can be mimicked.

PCM plasticity mimics mammalian synapses

We set out to create a memristive synapse that can express different plasticity rules, such as long-term plasticity, short-term plasticity, and their combinations, using commercial PCM at the nanoscale. We achieved this by combining the non-volatility (from amorphous-crystalline phase transitions) and the volatility (from electrostatic changes) of PCMs.

Phase change materials have been independently researched for memory and transistor applications, but their combination for neuromorphic computing has not been previously explored. Our demonstration shows how the non-volatility of the devices can enable long-term plasticity while the volatility provides the short-term plasticity and their combination allows for other mixed-plasticity computations, much like in the mammalian brain.
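To make the idea of mixed plasticity concrete, here is a minimal toy sketch in which a synapse's efficacy is the sum of a persistent part and a decaying part. It is not a model of our device: the class name `MixedPlasticitySynapse`, the exponential decay, and every constant are illustrative assumptions.

```python
import math


class MixedPlasticitySynapse:
    """Toy synapse: efficacy = non-volatile part + decaying volatile part.

    All names and constants are illustrative assumptions, not device data.
    """

    def __init__(self, g_long=1.0, tau_short=5.0):
        self.g_long = g_long        # non-volatile component (long-term plasticity)
        self.g_short = 0.0          # volatile component (short-term plasticity)
        self.tau_short = tau_short  # assumed decay time constant of the volatile part

    def update_long(self, delta):
        """Persistent change: stays until the next explicit update."""
        self.g_long += delta

    def update_short(self, delta):
        """Transient change: relaxes back toward zero over time."""
        self.g_short += delta

    def step(self, dt):
        """Advance time by dt; only the volatile part decays."""
        self.g_short *= math.exp(-dt / self.tau_short)

    def efficacy(self):
        return self.g_long + self.g_short


syn = MixedPlasticitySynapse()
syn.update_long(0.2)     # long-term potentiation
syn.update_short(0.5)    # short-term boost on top of it
before = syn.efficacy()
syn.step(dt=50.0)        # wait ten decay time constants
after = syn.efficacy()
print(before, after)
```

After the wait, the short-term boost has almost vanished while the long-term change remains, which is the qualitative behavior the two plasticity types are meant to capture.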

We began testing our idea toward the end of 2019, starting by using the volatility property in the devices for a different application: compensating for the non-idealities in phase change computational memories. Our team fondly remembers chatting over coffee, discussing the first experimental results that would allow us to take the next step of building the synthetic synapses we originally imagined. With some learning and trial and error during the pandemic, by early 2021 we had our first set of results and things worked out the way we had imagined.

To test the utility of our device, we implemented a curated form of an algorithm for sequential learning that our co-author, Timoleon Moraitis, was developing to use spiking networks for learning in dynamically changing environments. From this emerged our implementation of a short-term spike-timing-dependent plasticity framework that allowed us to demonstrate a biologically inspired form of sequential learning on artificial hardware. Instead of the playful cat that we mentioned earlier, we showed how machine vision can recognize the moving frames of a boy and a girl.
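For readers unfamiliar with spike-timing-dependent plasticity, the sketch below shows a generic pair-based rule whose effect fades over time. It is a textbook-style illustration, not the algorithm from the paper; the function names `stdp_delta` and `short_term_weight` and all amplitudes and time constants are assumptions.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.1, a_minus=0.12, tau=20.0):
    """Pair-based STDP: potentiate if pre fires before post, else depress."""
    dt = t_post - t_pre
    if dt >= 0:
        return a_plus * math.exp(-dt / tau)
    return -a_minus * math.exp(dt / tau)


def short_term_weight(spike_pairs, t_now, w_base=0.5, tau_decay=100.0):
    """Baseline weight plus STDP changes that each fade with tau_decay."""
    w = w_base
    for t_pre, t_post in spike_pairs:
        t_event = max(t_pre, t_post)   # change applies once the pair completes
        if t_event <= t_now:
            age = t_now - t_event
            w += stdp_delta(t_pre, t_post) * math.exp(-age / tau_decay)
    return w


pairs = [(0.0, 5.0), (10.0, 12.0)]          # pre fires shortly before post, twice
w_soon = short_term_weight(pairs, t_now=12.0)
w_late = short_term_weight(pairs, t_now=2000.0)
print(w_soon, w_late)
```

Right after the correlated spikes the weight is raised, and long afterward it has relaxed to its baseline, so the synapse adapts to recent input without permanently overwriting what it learned.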

Later, we expanded the concept to show the emulation of other biological processes for useful computations. Crucially, we showed how the homeostatic phenomena in the brain could provide a means to construct efficient artificial hardware for solving difficult combinatorial optimization problems. We achieved this by constructing stochastic Hopfield neural networks, where the noise mechanisms at the synaptic junction provide efficiency gains to computational algorithms.

Looking ahead to full-scale implementation

Our results are more exploratory than actual system-level demonstrations. In the latest paper, we propose a new synaptic concept that does more than the fixed synaptic weighting operation used in modern artificial neural networks. While we plan to take this approach further, we believe our existing proof-of-principle results already hold significant scientific interest for the broader field of neuromorphic engineering, both for computing and for understanding the brain better through more faithful emulations of it.

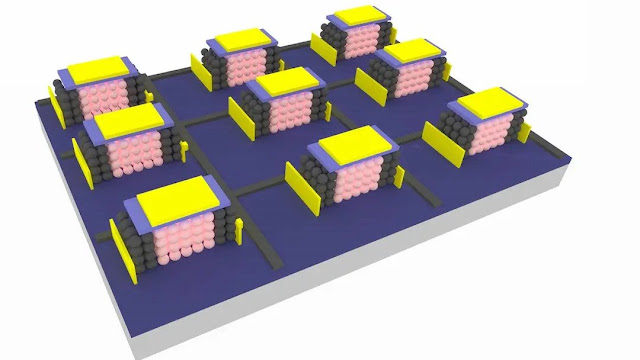

Our devices are based on a well-researched technology and are easy to fabricate and operate. The key challenge we see is the at-scale implementation, stringing together our computational primitive and other hardware blocks. Building a full-fledged computational core with our devices will require a rethinking of the design and implementation of the peripheral circuitry.

Currently, this circuitry is designed for conventional memristive computational hardware, whereas our devices require additional control terminals and resources to function. There are some ideas in place, such as redefining the layouts and restructuring the basic device design, which we are currently exploring.

Our current results show how relevant mixed-plasticity neural computations can prove to be in neuromorphic engineering. The demonstration of sequential learning can allow neural networks to recognize and classify objects more efficiently. This not only makes, for example, visual cognition more human-like but also provides significant savings on the expensive training processes.

Our illustration of a Hopfield neural network allows us to solve difficult optimization problems. We show an example of max cut, a graph coloring problem with utility in applications such as chip design. Other applications include problems like flight scheduling, internet packet routing, and more.
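As a rough software illustration of how noise can help a Hopfield network with max cut, the sketch below gives every edge a weight of -1 and updates each unit from its local field plus Gaussian noise that is gradually reduced. This is a generic simulated version, not our hardware implementation; the function names, the linear noise schedule, and the example graph are all assumptions.

```python
import random


def cut_size(edges, spins):
    """Number of edges whose endpoints fall on opposite sides."""
    return sum(1 for i, j in edges if spins[i] != spins[j])


def stochastic_hopfield_maxcut(n, edges, sweeps=200, noise0=2.0, seed=0):
    """Noisy asynchronous Hopfield updates; returns the best cut seen."""
    rng = random.Random(seed)
    neighbors = [[] for _ in range(n)]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)

    spins = [rng.choice((-1, 1)) for _ in range(n)]
    best_spins, best_cut = spins[:], cut_size(edges, spins)

    for sweep in range(sweeps):
        noise = noise0 * (1 - sweep / sweeps)    # assumed linear annealing
        for i in rng.sample(range(n), n):        # random asynchronous order
            # Edge weights are -1, so the field is minus the neighbor sum.
            field = -sum(spins[j] for j in neighbors[i])
            field += rng.gauss(0.0, noise)       # stand-in for synaptic noise
            if field != 0:
                spins[i] = 1 if field > 0 else -1
        current = cut_size(edges, spins)
        if current > best_cut:
            best_spins, best_cut = spins[:], current
    return best_spins, best_cut


# Hypothetical example graph: a 4-cycle with one chord.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
best_spins, best_cut = stochastic_hopfield_maxcut(4, edges)
print(best_spins, best_cut)
```

The noise lets the network escape poor configurations early on, and lowering it lets the state settle. In this sketch the noise comes from a pseudo-random generator, whereas in hardware it would come from the device itself.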

Source: ibm.com
